Note: This article on testing Content on Hover or Focus was written by a human, with the assistance of artificial intelligence.

Explanation of the success criterion

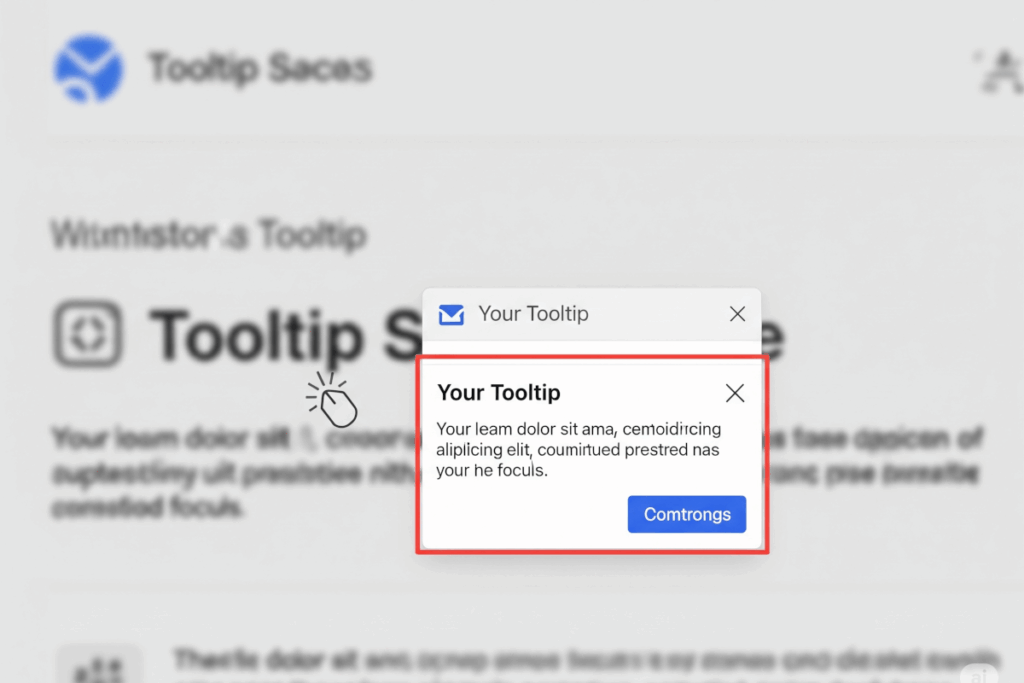

WCAG 1.4.13 Content on Hover or Focus is a Level AA Success Criterion. It applies when receiving and then removing pointer hover or keyboard focus causes additional content to become visible and then hidden. In that case, the following must be true:

- Dismissible: Users can dismiss the additional content without moving the pointer or keyboard focus (for example, by pressing Escape), unless the content communicates an input error or does not obscure or replace other content.

- Hoverable: If it appears on hover, users can move the pointer over it without it vanishing.

- Persistent: It remains visible until the hover or focus trigger is removed, the user dismisses it, or its information is no longer valid.

Exception: The criterion does not apply when the visual presentation of the additional content is controlled by the user agent and is not modified by the author, such as the browser's native tooltip for an HTML `title` attribute.
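The three conditions and the exception combine into a simple decision rule. The Python sketch below records what a tester observed for one trigger and reports which conditions fail. The `Observation` fields and the `evaluate_1_4_13` function are hypothetical names chosen for illustration; the observations themselves still have to come from hands-on testing.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """What a tester observed for one hover/focus trigger (hypothetical record format)."""
    trigger: str
    user_agent_controlled: bool   # e.g. a native title tooltip the author has not changed
    shown_on_hover: bool          # can pointer hover reveal the content?
    shows_input_error: bool       # does the content communicate an input error?
    obscures_other_content: bool  # does it obscure or replace other content?
    dismissible_in_place: bool    # dismissible (e.g. Escape) without moving pointer or focus?
    hoverable: bool               # can the pointer move onto it without it disappearing?
    persistent: bool              # stays until trigger removed, dismissed, or no longer valid?


def evaluate_1_4_13(obs: Observation) -> list:
    """Return the names of the 1.4.13 conditions this trigger fails (empty list = pass)."""
    if obs.user_agent_controlled:
        return []  # exception: presentation controlled by the user agent
    failures = []
    # Dismissal is only required when the content obscures or replaces other
    # content and is not an input error message.
    dismissal_required = obs.obscures_other_content and not obs.shows_input_error
    if dismissal_required and not obs.dismissible_in_place:
        failures.append("dismissible")
    # Hoverability only applies to content that pointer hover can trigger.
    if obs.shown_on_hover and not obs.hoverable:
        failures.append("hoverable")
    if not obs.persistent:
        failures.append("persistent")
    return failures


# Usage: a custom tooltip that covers nearby text, ignores Escape,
# and disappears when the pointer moves onto it.
tooltip = Observation(
    trigger="Help icon",
    user_agent_controlled=False,
    shown_on_hover=True,
    shows_input_error=False,
    obscures_other_content=True,
    dismissible_in_place=False,
    hoverable=False,
    persistent=True,
)
print(evaluate_1_4_13(tooltip))  # ['dismissible', 'hoverable']
```

Keeping each condition as a separate check mirrors the criterion's wording, so a failure report names the exact condition that needs fixing.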

Who does this benefit?

- Users with low vision can view hover or focus content without lowering their preferred magnification.

- Users who enlarge their cursor can view hover content that the cursor would otherwise obscure.

- Users with low vision or cognitive disabilities have more time to perceive new content and can view it with fewer distractions.

- Users with low pointer accuracy can easily dismiss content triggered by accident.

Testing via Automated Tools

Automated tools offer limited but helpful support for testing WCAG 1.4.13 Content on Hover or Focus. They are good at quickly identifying potential trigger elements, such as tooltips or popovers, and can detect content tied to hover or focus states, for example through CSS `:hover` and `:focus` rules. Because they are fast and consistent, they provide a useful starting point for deciding where to inspect further across a website.

However, significant limitations exist. Automated tools cannot reliably assess the critical interactive behaviors: whether the content can be dismissed, whether it persists, and whether it stays visible when the pointer moves over it. These dynamic aspects require human observation of visual and timing-based changes. Automated tools also struggle to replicate specific user conditions or inputs that might reveal hidden content.
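As an illustration of the "find candidates, then inspect" role described above, here is a minimal Python sketch using only the standard library. It flags elements whose attributes often signal hover or focus content and lists CSS selectors that use `:hover` or `:focus`. `TriggerScanner`, `find_hover_focus_selectors`, and the `TRIGGER_HINTS` list are hypothetical; the heuristics are assumptions rather than a standard rule set, and content shown by JavaScript event listeners is not detected at all.

```python
import re
from html.parser import HTMLParser

# Hypothetical heuristics: attributes that often accompany hover/focus content.
TRIGGER_HINTS = ("aria-describedby", "aria-haspopup", "aria-expanded", "data-tooltip")


class TriggerScanner(HTMLParser):
    """Collects (tag, reason) pairs for elements that *might* show content on hover or focus."""

    def __init__(self) -> None:
        super().__init__()
        self.candidates = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if "title" in attr_map:
            # Native title tooltips are drawn by the browser, so the exception likely applies.
            self.candidates.append((tag, "title (user agent tooltip)"))
        elif attr_map.get("role") == "tooltip":
            self.candidates.append((tag, "role=tooltip"))
        else:
            for hint in TRIGGER_HINTS:
                if hint in attr_map:
                    self.candidates.append((tag, hint))
                    break


def find_hover_focus_selectors(css: str) -> list:
    """Return selectors (selector lists kept as written) that use :hover or :focus*."""
    selectors = re.findall(r"([^{}]+)\{", css)  # text immediately before each "{"
    return [s.strip() for s in selectors if re.search(r":(hover|focus)", s)]


# Usage
scanner = TriggerScanner()
scanner.feed(
    '<button aria-describedby="tip1">Help</button>'
    '<span role="tooltip" id="tip1">Opens help</span>'
    '<abbr title="World Wide Web">WWW</abbr>'
)
print(scanner.candidates)
# [('button', 'aria-describedby'), ('span', 'role=tooltip'), ('abbr', 'title (user agent tooltip)')]

css = ".tip { display: none } .help:hover + .tip, .help:focus + .tip { display: block }"
print(find_hover_focus_selectors(css))
# ['.help:hover + .tip, .help:focus + .tip']
```

A list like this tells a tester where to look; it says nothing about whether the flagged content is dismissible, hoverable, or persistent.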

Testing via Artificial Intelligence (AI)

AI-based testing offers significant advantages for evaluating WCAG 1.4.13 Content on Hover or Focus. It can quickly scan code for common patterns in hover- or focus-triggered content, such as tooltips and dropdowns, and flag likely appearance and timing issues. This speeds up initial testing and helps catch potential problems early in development.

However, AI has notable limitations. It struggles to fully replicate human interaction, which makes it unreliable for testing nuanced behaviors such as moving the pointer onto the revealed content without the content disappearing, or dismissing content without moving focus. Dynamic or custom content can also lead to misinterpretations, resulting in false positives or missed issues.

Testing via Manual Inspection

Manual testing is crucial for WCAG 2.1 Success Criterion 1.4.13 Content on Hover or Focus. It allows testers to observe directly how interactive elements such as tooltips or dropdowns behave on hover or focus. This helps verify that content is dismissible, hoverable, and persistent, and catches subtle usability issues that automated tools often miss.

However, manual testing can be time-consuming and requires skilled testers who are familiar with diverse user interactions. Consistent results can also be hard to achieve because testing methods and platforms vary. Despite these drawbacks, manual testing remains essential for accurately evaluating this success criterion, because the criterion depends on nuanced user interaction.
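One way to make manual results more consistent is to note, for each trigger, when the content appears and disappears relative to hover, focus, and dismissal (for example, while reviewing a screen recording). The Python sketch below flags any disappearance that happens while the trigger is still active, which covers both hoverable and persistent failures. The log format and the event names (`hover_on` meaning the pointer is over the trigger or the revealed content, `hover_off`, `focus`, `blur`, `show`, `hide`, `dismiss`) are hypothetical, and whether the information has become "no longer valid" is still a tester's judgment.

```python
def premature_hides(log):
    """Return timestamps (ms) where content was hidden while its trigger was still active.

    `log` is a list of (time_ms, event) pairs. Hiding is acceptable once the
    pointer has left both the trigger and the content and focus has left the
    trigger, or after the user has dismissed the content.
    """
    hovered = focused = dismissed = False
    failures = []
    # Stable sort by time only, so same-time events keep their recorded order.
    for time_ms, event in sorted(log, key=lambda entry: entry[0]):
        if event == "hover_on":
            hovered = True
        elif event == "hover_off":
            hovered = False
        elif event == "focus":
            focused = True
        elif event == "blur":
            focused = False
        elif event == "dismiss":
            dismissed = True
        elif event == "show":
            dismissed = False  # a new appearance resets any earlier dismissal
        elif event == "hide" and (hovered or focused) and not dismissed:
            failures.append(time_ms)
    return failures


# Usage: the tooltip vanishes at 1500 ms while the pointer is still on it,
# then later is correctly hidden after the user dismisses it with the keyboard.
log = [
    (0, "hover_on"), (300, "show"),
    (1500, "hide"),
    (2000, "hover_off"),
    (3000, "focus"), (3100, "show"), (3500, "dismiss"), (3500, "hide"),
    (4000, "blur"),
]
print(premature_hides(log))  # [1500]
```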

Which approach is best?

No single approach to testing 1.4.13 Content on Hover or Focus is perfect. However, combining the strengths of each approach gives more reliable results than any one approach alone.

Automated tools can quickly flag common interactive elements such as tooltips or popovers, but they fall short in verifying the core requirements of dismissibility, hoverability, and persistence. They are good for initial scans but cannot confirm full conformance.

AI-based tools offer more sophisticated analysis and may simulate interactions or visually analyze overlays. However, their effectiveness varies, and they often struggle with the dynamic, user-driven behaviors central to this criterion.

This is where manual testing becomes indispensable. It allows testers to assess directly whether revealed content remains visible when hovered or focused, can be dismissed without moving the pointer or focus, and does not obscure vital page elements. Testers should simulate diverse user interactions, including mouse and keyboard navigation, and consider magnification and assistive technologies to catch nuanced accessibility barriers.

By combining these methods, you get broad coverage and more accurate validation of this success criterion.