Note: The creation of this article on testing Headings and Labels was human-based, with the assistance of artificial intelligence.

Explanation of the success criterion

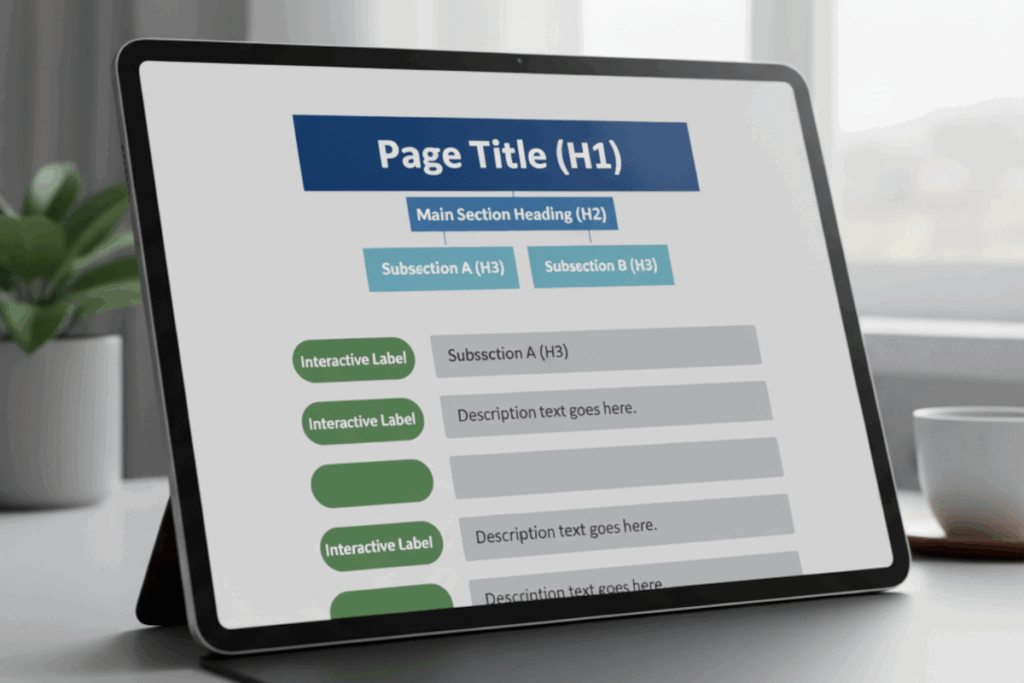

WCAG 2.4.6 Headings and Labels is a Level AA Success Criterion. It requires that headings and labels describe the topic or purpose of the content they introduce. When these elements are well-crafted, users can quickly understand what follows, move efficiently through complex pages, and decide where to focus their attention.

Headings provide structure: they break content into logical, scannable sections that reflect its hierarchy and flow. For users of screen readers or keyboards, well-structured headings are essential for navigating without visual cues. For those with cognitive or learning disabilities, descriptive headings transform overwhelming information into manageable, meaningful parts. Labels, meanwhile, serve as precise signposts for input fields and interactive elements, guiding users to take the right action or provide the right information.

Clear, consistent, and descriptive headings and labels don’t just satisfy compliance requirements; they create clarity for everyone. They’re a fundamental part of a usable, intuitive digital experience. Accessibility begins here, with communication that’s intentional, structured, and human-centered.

Who does this benefit?

This success criterion supports nearly every user, but it’s essential for:

- Screen reader users who rely on headings and labels to understand structure and purpose without sight.

- Keyboard-only users who use headings to navigate efficiently across a page.

- Users with cognitive or learning disabilities who benefit from organized, descriptive language that improves comprehension.

- Users with low vision who depend on visual scanning aided by consistent heading levels.

- Speech recognition users who can use headings and labels as voice commands for quick navigation.

- All users, who gain from clearer organization, better readability, and a more seamless experience.

Testing via Automated Testing

Automated testing forms the first layer of accessibility assurance. It identifies structural issues such as missing heading tags, skipped levels, or unlabeled form elements. These scans provide rapid, large-scale coverage, which makes them well suited to establishing a baseline. However, automated tools can’t judge whether headings or labels make sense: they can confirm presence, not purpose.
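As a rough illustration of what such a structural scan does, here is a minimal sketch in Python using only the standard library. The names `StructureScanner`, `scan`, and `SAMPLE` are hypothetical, and the rules are deliberately simplified (for example, it does not recognize a control wrapped inside its `<label>`), so treat it as a sketch rather than a replacement for an established accessibility scanner.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}
CONTROL_TAGS = {"input", "select", "textarea"}
# Input types that are not expected to have a separate text label.
SKIPPED_INPUT_TYPES = {"hidden", "submit", "reset", "button", "image"}


class StructureScanner(HTMLParser):
    """Collects headings, <label for> targets, and form controls."""

    def __init__(self):
        super().__init__()
        self.headings = []          # (level, text) in document order
        self.label_targets = set()  # ids referenced by <label for="...">
        self.controls = []          # attribute dicts of form controls
        self._open_level = None     # level of the heading being read, if any
        self._parts = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag in HEADING_TAGS:
            self._open_level = int(tag[1])
            self._parts = []
        elif tag == "label" and attributes.get("for"):
            self.label_targets.add(attributes["for"])
        elif tag in CONTROL_TAGS:
            input_type = (attributes.get("type") or "text").lower()
            if input_type not in SKIPPED_INPUT_TYPES:
                self.controls.append(attributes)

    def handle_data(self, data):
        if self._open_level is not None:
            self._parts.append(data)

    def handle_endtag(self, tag):
        if tag in HEADING_TAGS and self._open_level is not None:
            text = " ".join("".join(self._parts).split())
            self.headings.append((self._open_level, text))
            self._open_level = None


def scan(html):
    """Return a list of structural issues found in an HTML string."""
    scanner = StructureScanner()
    scanner.feed(html)
    scanner.close()
    issues = []
    previous = 0
    for level, text in scanner.headings:
        if not text:
            issues.append(f"empty h{level}")
        if previous and level > previous + 1:
            issues.append(f"skipped level: h{previous} to h{level}")
        previous = level
    for attributes in scanner.controls:
        # Simplification: only <label for>, aria-label, and aria-labelledby count.
        labeled = (
            attributes.get("id") in scanner.label_targets
            or attributes.get("aria-label")
            or attributes.get("aria-labelledby")
        )
        if not labeled:
            name = attributes.get("name") or attributes.get("id") or "?"
            issues.append(f"unlabeled control: {name}")
    return issues


SAMPLE = """
<h1>Account settings</h1>
<h3>Password</h3>
<h2></h2>
<label for="email">Email address</label>
<input id="email" type="email">
<input name="phone" type="tel">
"""

print(scan(SAMPLE))
```

Note that the scan reports the skipped level, the empty heading, and the unlabeled field, but it would accept a heading that reads “Stuff” without complaint. That gap is exactly the presence-versus-purpose limit described above.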

Testing via Artificial Intelligence (AI)

AI adds a semantic layer to the process. Using natural language processing, AI can interpret meaning and evaluate whether headings and labels are contextually relevant, consistent, and semantically aligned with content. It detects vague terms like “Click here” or “Learn more” and identifies patterns that may hinder understanding. While AI adds valuable interpretive depth, it still struggles with human nuances such as tone, intent, and contextual judgment.
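To make the idea of flagging vague wording concrete, the sketch below uses a plain phrase list in place of a language model. This is a deliberately simpler stand-in, not how an AI-based tool works internally. The phrase list `VAGUE_PHRASES` and the function names are hypothetical, and a real project would tune them to its own content.

```python
import re

# Hypothetical starter list; extend or replace it for a real project.
VAGUE_PHRASES = {"click here", "learn more", "read more", "more", "here", "details"}


def normalize(text):
    """Lowercase, strip punctuation, and collapse whitespace."""
    cleaned = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(cleaned.split())


def flag_for_review(texts):
    """Return (text, reason) pairs for headings or labels worth a human look."""
    flagged = []
    seen = set()
    for text in texts:
        key = normalize(text)
        if not key:
            flagged.append((text, "empty"))
        elif key in VAGUE_PHRASES:
            flagged.append((text, "vague"))
        elif key in seen:
            # Repeated wording may mean two sections or fields are indistinguishable.
            flagged.append((text, "duplicate"))
        seen.add(key)
    return flagged


labels = ["Billing address", "Learn more", "Click here!", "Billing address", ""]
for text, reason in flag_for_review(labels):
    print(f"{reason}: {text!r}")
```

A list like this catches only exact matches. Judging whether “Overview” actually describes the section beneath it requires comparing the heading with the surrounding content, which is where language models, and ultimately human reviewers, come in.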

Testing via Manual Testing

Manual testing remains the gold standard. Human evaluators assess whether headings logically guide the user journey and whether labels truly communicate their purpose. They verify that assistive technologies announce these elements in a meaningful way, ensuring real-world usability. While time-intensive, this hands-on review captures clarity, flow, and empathy that no machine can replicate.

Which approach is best?

No single testing method can fully validate WCAG 2.4.6 Headings and Labels. Automated, AI, and manual testing each bring unique strengths, but it’s their integration that creates a truly robust accessibility strategy.

Automated scans serve as the first line of defense, detecting structural inconsistencies that might otherwise go unnoticed, such as skipped heading levels, unlabeled form fields, or headings used purely for visual styling rather than semantic meaning. These tools excel at scale, allowing teams to quickly identify patterns and systemic issues across hundreds or thousands of pages.

AI tools then elevate the process from structural validation to semantic understanding. Leveraging natural language processing and machine learning, AI can analyze whether headings and labels are not only present but contextually appropriate. It identifies vague, repetitive, or misleading phrasing, such as “Click here” or “Learn more,” and detects when headings fail to align with the actual topic or intent of the content beneath them. This layer brings nuance, enabling organizations to measure not just compliance, but communication quality.

Finally, manual testing provides the essential human perspective. Expert testers evaluate whether the headings logically guide users through the page, whether labels clearly describe their associated controls, and how these elements are perceived by assistive technologies. They assess tone, flow, and intent, dimensions that no algorithm can fully capture. Manual testing ensures that the experience is not merely accessible on paper, but intuitive and meaningful in practice.

Together, these three approaches form a comprehensive, human-centered methodology. Automation delivers reach, AI brings contextual intelligence, and manual testing ensures authenticity. The result is more than technical compliance: it’s digital communication that is clear, purposeful, and truly inclusive for every user.