Note: This article on testing Motion Actuation was written by a human, with the assistance of artificial intelligence.

Explanation of the success criterion

WCAG 2.5.4 Motion Actuation is a Level A Success Criterion. It addresses an often-overlooked barrier in digital accessibility: reliance on motion-based interactions.

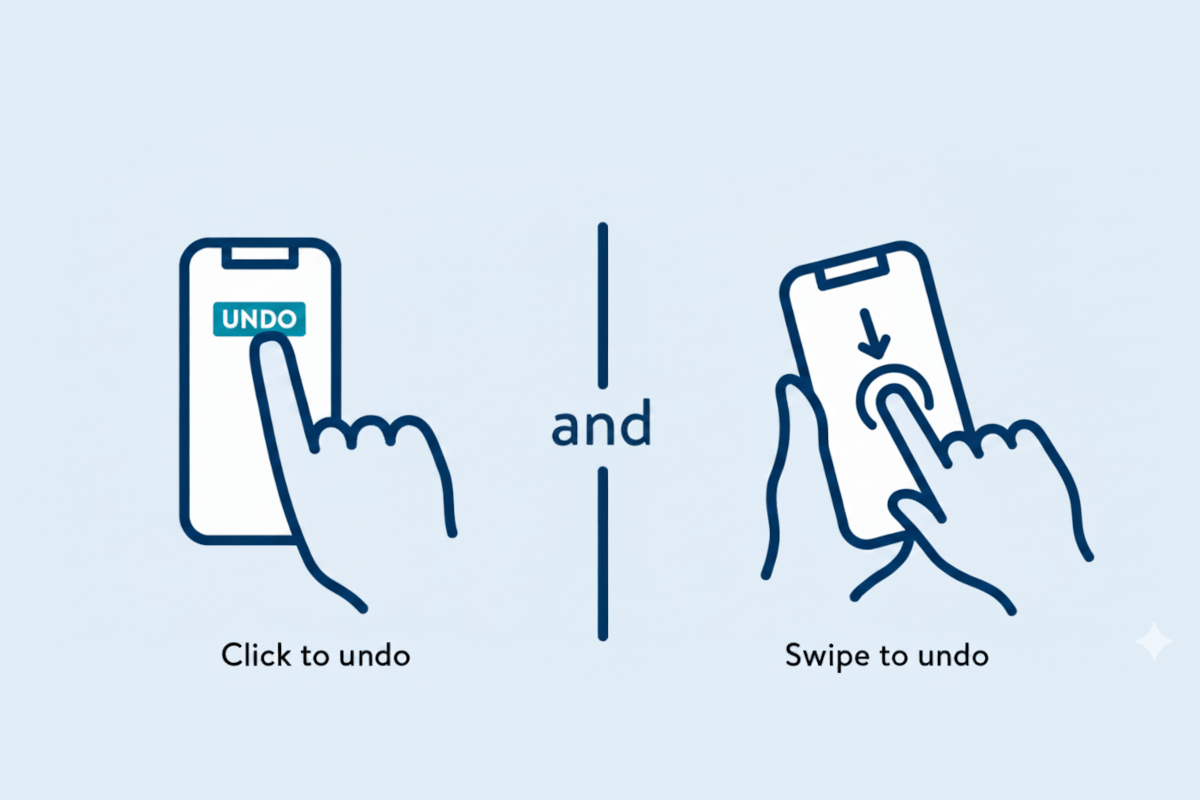

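Many modern websites and applications use device motion, such as shaking or tilting a phone, or user motion, such as gesturing toward a camera, to trigger actions. These interactions can exclude users who cannot perform the movements, and they can cause accidental activation for users who cannot hold a device steady. Motion Actuation therefore requires two things: any functionality that can be operated by device or user motion must also be operable through user interface components, such as on-screen buttons or controls, and the response to motion must be able to be disabled to prevent accidental actuation. Two exceptions apply: when the motion operates functionality through an accessibility supported interface, and when the motion is essential to the function (for example, a pedometer that counts steps from device motion). Meeting these requirements lets every user engage with content independently, efficiently, and reliably.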
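A minimal, framework-agnostic sketch of a conforming pattern, assuming a hypothetical "shake to undo" feature: the same function is reachable through a button, and a user-facing setting switches the motion response off, which WCAG 2.5.4 expects alongside the UI alternative. In a browser, the motion values would come from a `devicemotion` event and the button path from a click handler; the class name, method names, and threshold below are illustrative assumptions, not a platform API.

```python
import math
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical shake threshold in m/s^2; real apps tune this per device.
SHAKE_THRESHOLD = 15.0


@dataclass
class ShakeToUndo:
    """Illustrative model: one "undo" function reachable by shaking OR by a button.

    Class, method, and threshold names are assumptions, not a platform API.
    """
    history: list[str] = field(default_factory=list)
    motion_enabled: bool = True  # user-facing setting to turn motion response off

    def undo(self) -> Optional[str]:
        # Both input paths call this one method, so they stay equivalent.
        return self.history.pop() if self.history else None

    def on_undo_button(self) -> Optional[str]:
        # UI-component path: works whether or not motion is enabled.
        return self.undo()

    def on_motion(self, x: float, y: float, z: float) -> Optional[str]:
        # Motion path: x, y, z are assumed to exclude gravity.
        if not self.motion_enabled:
            return None  # the user disabled motion, so ignore all movement
        if math.hypot(x, y, z) < SHAKE_THRESHOLD:
            return None  # too gentle to count as a shake
        return self.undo()
```

Routing both input paths through a single `undo` method is a deliberate choice: it keeps the button from drifting out of sync with the gesture as the feature evolves.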

Who does this benefit?

- Users with limited mobility or dexterity: those who cannot perform gestures like shaking, tilting, or other motion-based interactions.

- Users with tremors or motor control challenges: those who may trigger actions unintentionally when motion detection is active and cannot be turned off.

- Assistive technology users: individuals relying on devices like switches, eye trackers, or adaptive controllers that cannot perform motion gestures.

- Older adults or users with temporary injuries: those who may find motion-based interactions difficult.

- Anyone in a situation where motion gestures are impractical: for example, when the device is mounted in a fixed position, such as on a wheelchair or a stand.

Testing via Automated Testing

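Automated testing offers rapid, large-scale scanning of code to find motion-based triggers, for example web code that listens for `devicemotion` or `deviceorientation` events. Its speed makes it ideal for locating every place motion is used and for flagging obvious gaps, but a scanner generally cannot confirm that an equivalent UI control exists for the same function, that the motion response can be disabled, or that the alternatives actually work.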
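As a rough illustration of what such a scan looks for, the Python sketch below searches source text for references to web motion APIs: the `devicemotion` and `deviceorientation` DOM events and a few W3C Generic Sensor API classes such as `Accelerometer` and `Gyroscope`. The pattern list and the `scan_source` helper are assumptions for illustration; native mobile apps use different APIs, and a production tool would parse code rather than match text.

```python
import re

# Assumed, deliberately incomplete list of web motion API references.
# The first pattern has no word boundaries so it also catches
# "ondevicemotion" and "DeviceMotionEvent".
MOTION_PATTERNS = [
    re.compile(r"devicemotion|deviceorientation", re.IGNORECASE),
    re.compile(r"\b(?:Accelerometer|Gyroscope|LinearAccelerationSensor|GravitySensor)\b"),
]


def scan_source(text: str) -> list[tuple[int, str]]:
    """Return (line_number, matched_text) for every motion API reference found."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for pattern in MOTION_PATTERNS:
            for match in pattern.finditer(line):
                findings.append((lineno, match.group(0)))
    return findings
```

Each hit is a location to review, not a failure in itself: the scan cannot tell whether a matching UI control exists or whether motion can be switched off. Because it matches text, it also reports comments and related names such as `deviceorientationabsolute`.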

Testing via Artificial Intelligence (AI)

AI-based testing adds contextual analysis, simulating user interactions to identify where motion gestures may create barriers and suggesting potential solutions. While AI can recognize patterns and predict issues with more nuance than rule-based automation, it cannot fully replicate the experience of a person using assistive technology and may miss subtle usability problems.

Testing via Manual Testing

Manual testing, in contrast, involves direct evaluation by real users or testers interacting with the system via alternative controls, adaptive devices, or other non-motion inputs. This approach provides the most reliable insight into practical usability, uncovering issues that automated or AI-driven methods might overlook. The trade-off is that manual testing is time-intensive and less scalable, but it delivers the highest confidence that a site truly meets the intent of Motion Actuation.

Which approach is best?

No single testing method is sufficient on its own to confirm conformance with WCAG 2.5.4 Motion Actuation. A hybrid approach combines the speed of automated testing, the contextual insight of AI-based testing, and the thorough validation of manual testing to provide comprehensive coverage.
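One way to make the hybrid approach concrete is to record each method's findings per motion-triggered function and keep the most authoritative result, with manual verdicts outranking AI findings and AI findings outranking automated flags. The `merge_findings` helper, its source labels, and the status strings are hypothetical; the precedence order is an assumption that mirrors the confidence levels described for each method.

```python
# Assumed precedence: higher number = more authoritative source.
PRECEDENCE = {"automated": 1, "ai": 2, "manual": 3}


def merge_findings(findings: list[tuple[str, str, str]]) -> dict[str, tuple[str, str]]:
    """Reduce (function, source, status) findings to one (status, source) per function.

    A finding replaces the current one if its source ranks the same or higher,
    so a later finding from an equally ranked source wins.
    """
    merged: dict[str, tuple[str, str]] = {}
    for function, source, status in findings:
        current = merged.get(function)
        if current is None or PRECEDENCE[source] >= PRECEDENCE[current[1]]:
            merged[function] = (status, source)
    return merged
```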

The process begins with automated testing, which quickly scans websites or applications to find every place where motion is used to trigger functionality and flags those locations for review. This step establishes a baseline, revealing structural and technical gaps at scale. Automated testing excels at efficiency, enabling teams to cover large codebases rapidly and catch obvious problems that could otherwise go unnoticed.

Building on this foundation, AI-based testing adds context by simulating realistic user interactions. It assesses whether alternative controls are not only present but functional and appropriate to the context, highlighting subtle barriers that automation alone might miss. AI can approximate how users with different abilities might interact with the system, pointing to potential friction points and offering actionable recommendations for improvement.
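Whatever produces the inventory of motion-triggered functions, whether an AI-assisted pass, an automated scan, or a reviewer, each entry can be checked against the criterion in the same way: a UI control must perform the same function, and the motion response must be possible to disable, unless the supported-interface or essential exception applies. The sketch assumes a hypothetical `MotionFunction` record; claimed exceptions are flagged for human confirmation rather than passed automatically.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MotionFunction:
    """One function that responds to device or user motion (hypothetical inventory row)."""
    name: str
    ui_control: Optional[str]        # control that performs the same function, if any
    motion_can_be_disabled: bool     # user can turn off the motion response
    exception: Optional[str] = None  # "supported interface" or "essential", if claimed


def coverage_issues(inventory: list[MotionFunction]) -> list[str]:
    """List gaps against the two 2.5.4 requirements.

    Entries that claim an exception are not checked; they are flagged for confirmation.
    """
    issues = []
    for fn in inventory:
        if fn.exception:
            issues.append(f"{fn.name}: exception '{fn.exception}' claimed, confirm manually")
            continue
        if fn.ui_control is None:
            issues.append(f"{fn.name}: no UI control performs the same function")
        if not fn.motion_can_be_disabled:
            issues.append(f"{fn.name}: motion response cannot be disabled")
    return issues
```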

Finally, manual testing validates the real-world effectiveness of these alternatives by engaging actual users or accessibility specialists. Testers use switches, adaptive devices, or other non-motion inputs to confirm that every action can be completed reliably without motion, and they check that turning off the motion response prevents accidental activation. This hands-on evaluation uncovers nuances in usability and confirms that the experience works as intended for all users, including those with limited mobility and those who rely on assistive technologies.
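Manual results are easier to act on when each observation is recorded in the same shape. The sketch below assumes a hypothetical `ManualCheck` record per function and input method, and treats a function as passing only if every input method tested completed the task and motion never triggered it while disabled.

```python
from dataclasses import dataclass


@dataclass
class ManualCheck:
    """One observation from a manual session (assumed record format)."""
    function: str
    input_method: str            # e.g. "switch", "keyboard", "eye tracker"
    completed: bool              # task finished without any device or body motion
    accidental_activation: bool  # motion triggered the function while it was disabled


def verdicts(checks: list[ManualCheck]) -> dict[str, str]:
    """Return "pass" or "fail" per function; one failing check fails the function."""
    result: dict[str, str] = {}
    for check in checks:
        if result.get(check.function) == "fail":
            continue  # once failed under any input, the function stays failed
        ok = check.completed and not check.accidental_activation
        result[check.function] = "pass" if ok else "fail"
    return result
```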

By integrating automated, AI-based, and manual testing, a hybrid strategy provides comprehensive, high-confidence results. It identifies technical deficiencies and also captures contextual subtleties and real-world usability challenges, helping ensure that digital content is truly inclusive, functional, and empowering for every user.