Note: The creation of this article on testing Change on Request was human-based, with the assistance of artificial intelligence.

Explanation of the success criterion

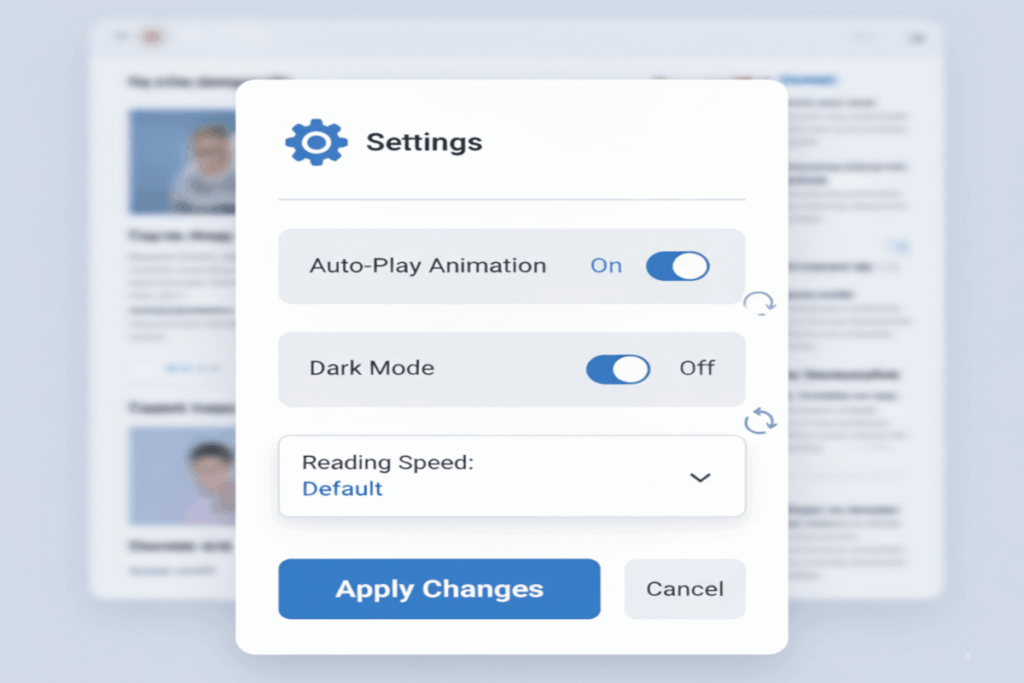

WCAG 3.2.5 Change on Request is a Level AAA Success Criterion focused on user autonomy and control. It requires that changes of context are initiated only by user request, or that a mechanism is available to turn off such changes. A change of context is a major change, such as opening a new window, moving focus, navigating to or reloading a page, or replacing content in a way that alters the meaning of the page, which can disorient users when it happens without their request.

This principle ensures that users are not surprised or disrupted by automatic changes, preserving context and reducing cognitive load. For digital accessibility professionals, this criterion reinforces the importance of designing experiences that respond to user intent rather than forcing changes on users, which fosters predictability and respects diverse interaction needs. Implementing Change on Request promotes a more inclusive digital environment where users feel empowered, confident, and in control of their interactions.

As a Level AAA requirement, this Success Criterion is considered aspirational rather than mandatory. It goes beyond the essential Level A and AA requirements, serving as a model for organizations aiming to deliver exceptional accessibility. For content creators and organizations committed to true digital equity, addressing Change on Request is not just a compliance measure; it is a statement of excellence in interaction design.

Who does this benefit?

- Users with cognitive disabilities: They gain predictability and control, avoiding disorientation from unexpected interface changes.

- Users with motor or interaction challenges: They can request changes on their terms, reducing effort and frustration.

- Assistive technology users: They encounter content and interface updates only when they expect them, improving compatibility and workflow.

- Content creators and designers: Clear testing highlights areas where user control can be enhanced, guiding more inclusive design decisions.

- Organizations and compliance teams: Testing demonstrates proactive accessibility practices, reducing legal risk and improving overall user experience.

- All users: Even those without disabilities benefit from predictable, user-driven interactions that reduce surprise and increase satisfaction.

Testing via automated tools

Automated testing excels at rapidly scanning large volumes of content and code to detect potential triggers of automatic changes of context, such as a meta refresh element with a delay, scripts that navigate or submit a form when a select value changes, or links and scripts that open new windows without user input. Its strength lies in speed and consistency, allowing teams to identify structural patterns or common coding practices that may violate the criterion. However, automation struggles to interpret user intent and context, so it can flag issues that aren't truly impactful or miss subtle instances where a change occurs without an explicit request.
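A static scan of this kind can be sketched in Python with the standard library's html.parser module. This is a minimal, heuristic sketch rather than a complete rule set: the class name ChangeOfContextScanner, the scan() helper, and the particular patterns it flags (a timed meta refresh, inline onchange/onblur handlers on form controls, window.open in a body onload handler, and target="_blank" links) are illustrative assumptions. It reads static markup only, so handlers attached with addEventListener or by a framework will be missed, which is exactly the automation gap described above.

```python
from dataclasses import dataclass
from html.parser import HTMLParser


@dataclass
class Finding:
    """A markup pattern that may trigger a change of context without a user request."""
    line: int
    tag: str
    reason: str


class ChangeOfContextScanner(HTMLParser):
    """Hypothetical heuristic scanner: every finding is a lead for human review."""

    def __init__(self):
        super().__init__()
        self.findings = []

    def _flag(self, tag, reason):
        line, _offset = self.getpos()  # position of the tag currently being parsed
        self.findings.append(Finding(line, tag, reason))

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names; bare attributes have value None.
        a = {name: (value or "") for name, value in attrs}

        # Timed refresh or redirect; an instant redirect (delay 0) is generally acceptable.
        if tag == "meta" and a.get("http-equiv", "").lower() == "refresh":
            delay = a.get("content", "").split(";", 1)[0].strip()
            if delay not in ("", "0"):
                self._flag(tag, f"timed meta refresh/redirect after {delay}s")

        # Inline handlers that might navigate or submit when a value changes or focus leaves.
        if tag in ("select", "input", "textarea") and ("onchange" in a or "onblur" in a):
            self._flag(tag, "inline onchange/onblur handler on a form control")

        # A window opened as soon as the page loads.
        if tag == "body" and "window.open" in a.get("onload", ""):
            self._flag(tag, "new window opened on page load")

        # New-window links pass if requested and announced, so flag them for a link-text check.
        if tag == "a" and a.get("target", "").lower() == "_blank":
            self._flag(tag, "link opens a new window; check that its text says so")


def scan(html):
    """Return the findings for one HTML document."""
    scanner = ChangeOfContextScanner()
    scanner.feed(html)
    scanner.close()
    return scanner.findings
```

Each finding is a lead, not a verdict: a select with an onchange handler may only filter a list on the same page, and a target="_blank" link is acceptable when its text tells users that it opens a new window.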

Testing via Artificial Intelligence (AI)

AI-based testing adds an interpretive layer, simulating user interactions and predicting how interface changes could affect real-world experiences. AI can analyze dynamic behavior, evaluate the likelihood of user confusion, and prioritize high-risk interactions for remediation. The challenge is that AI predictions rely heavily on training data and context interpretation, so unusual or highly customized interfaces may yield false positives or negatives, requiring human judgment to validate outcomes.
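The prioritization step can be illustrated with a small Python sketch. It assumes, hypothetically, that an AI model has already annotated each flagged interaction with a predicted likelihood of confusing users and a confidence in that estimate; the SEVERITY weights, the Candidate fields, and the 0.7 confidence threshold are illustrative values, not figures from any standard or tool. Predictions below the threshold are routed to human review, reflecting the point above that unusual interfaces can produce unreliable predictions.

```python
from dataclasses import dataclass

# Illustrative severity weights per kind of change of context (assumed values).
SEVERITY = {
    "navigation": 1.0,
    "page_reload": 0.8,
    "new_window": 0.6,
    "focus_move": 0.5,
}


@dataclass
class Candidate:
    """A flagged interaction annotated with hypothetical AI model output."""
    name: str
    kind: str                   # ideally one of the SEVERITY keys
    predicted_confusion: float  # estimated likelihood of disorienting users, 0..1
    model_confidence: float     # model's confidence in that estimate, 0..1


def risk(c: Candidate) -> float:
    # Unknown kinds get the maximum weight so they are never silently deprioritized.
    return c.predicted_confusion * SEVERITY.get(c.kind, 1.0)


def prioritize(candidates, min_confidence=0.7):
    """Sort by descending risk and list the names whose prediction needs a human check."""
    ranked = sorted(candidates, key=risk, reverse=True)
    needs_review = [c.name for c in ranked if c.model_confidence < min_confidence]
    return ranked, needs_review
```

Multiplying likelihood by a severity weight is only one simple ranking scheme; a team might prefer a tester-defined ordering, and the final verdict on each item still belongs to manual testing.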

Testing via manual evaluation

Manual testing remains the gold standard for confirming true accessibility impact. Skilled testers can interact with interfaces using a variety of assistive technologies, keyboard-only navigation, and cognitive simulations to verify whether changes truly respect user intent. The downside is that manual testing is time-consuming and resource-intensive, particularly on complex applications with frequent dynamic updates.
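Manual sessions are easier to audit when the tester records what they did and what the page did. The Python sketch below assumes a hypothetical event log (from tester notes or a recording tool) and flags any change of context that was not preceded by an explicit user request within a short window; the event names and the 500 ms window are assumptions. Timing is only a proxy for intent: the tester still decides whether the preceding action was actually a request for that change, since typing into a field, for example, is not a request to open a new window.

```python
from dataclasses import dataclass

# Hypothetical event names; only explicit activations count as user requests here.
USER_REQUESTS = {"click", "submit"}
CONTEXT_CHANGES = {"navigation", "page_reload", "new_window", "focus_move"}


@dataclass
class Event:
    t_ms: int  # milliseconds since the session started
    kind: str


def unrequested_changes(events, window_ms=500):
    """Return context changes with no user request in the preceding window_ms.

    Each result is a candidate 3.2.5 issue unless the page offers a way to turn
    such automatic changes off.
    """
    flagged = []
    last_request = None
    for ev in sorted(events, key=lambda e: e.t_ms):
        if ev.kind in USER_REQUESTS:
            last_request = ev.t_ms
        elif ev.kind in CONTEXT_CHANGES:
            if last_request is None or ev.t_ms - last_request > window_ms:
                flagged.append(ev)
        # Any other event (typing, scrolling, hovering) is ignored.
    return flagged
```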

Which approach is best?

In practice, a hybrid approach provides the most reliable, actionable assessment of Change on Request compliance: automated scans identify potential issues, AI contextualizes and prioritizes them, and manual testing validates their real-world impact. This method helps organizations not only meet WCAG requirements but also deliver genuinely user-centric, predictable, and controllable experiences.

The process begins with automated testing, which rapidly scans code and content to identify potential triggers for automatic changes, such as scripts, dynamic content updates, or interface modifications that could occur without user initiation. This stage provides a broad overview, flagging patterns and high-risk areas across large-scale applications, giving teams a foundation for targeted analysis.

Next, AI-based testing evaluates these flagged instances in context, simulating realistic user interactions to determine whether changes could disrupt user control or cognitive flow. AI can prioritize issues based on predicted impact, identify nuanced patterns that automation might miss, and even suggest strategies for mitigating unintended interface changes. By leveraging AI’s predictive capabilities, teams can focus their efforts on high-risk areas, improving both efficiency and accuracy.

Finally, manual testing validates the findings from both automated and AI-assisted stages. Expert testers engage with the interface using keyboard navigation, assistive technologies, and cognitive simulations to confirm that changes occur solely at the user’s request and that the experience remains predictable and manageable. Manual testing also captures edge cases, contextual subtleties, and real-world interaction scenarios that no algorithm can fully anticipate.

By integrating these three methodologies, organizations achieve a comprehensive, user-centric assessment of Change on Request compliance, ensuring interfaces respect user autonomy, reduce cognitive load, and deliver an inclusive, accessible digital experience. This hybrid strategy not only supports adherence to WCAG 3.2.5 but also strengthens the overall quality, predictability, and usability of digital products.

Related Resources

- Understanding Success Criterion 3.2.5 Change on Request

- Mind the WCAG automation gap

- Providing a mechanism to request an update of the content instead of updating automatically

- Implementing automatic redirects on the server side instead of on the client side

- Using an instant client-side redirect

- Using meta refresh to create an instant client-side redirect

- Using the target attribute to open a new window on user request and indicating this in link text

- Using progressive enhancement to open new windows on user request

- Using an onchange event on a select element without causing a change of context

- Opening new windows and tabs from a link only when necessary