Note: This article on testing Accessible Authentication (Minimum) was written by a human, with the assistance of artificial intelligence.

Explanation of the success criterion

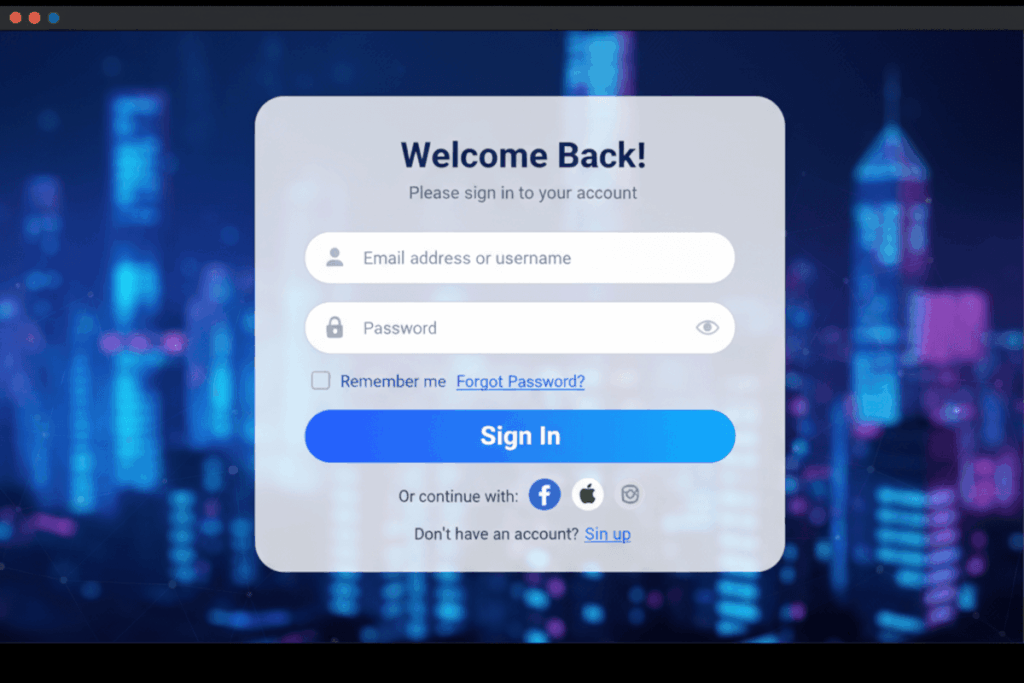

WCAG 3.3.8 Accessible Authentication (Minimum) is a Level AA Success Criterion. It focuses on removing cognitive barriers from the authentication process, so that users can log in, prove their identity, or complete security steps without having to recall, transcribe, or manipulate complex information. These tasks often exclude people with cognitive or memory disabilities.

The intent is simple but powerful: make authentication equitable. The criterion states that no step in an authentication process may require a cognitive function test, such as remembering a password or solving a puzzle, unless that step offers an alternative method, a mechanism that assists the user, or (at this level) a test that only asks the user to recognize objects or to identify content they provided themselves. In practice, systems should support accessible options such as password managers, copy and paste, device-based authentication, or saved credentials. By implementing accessible authentication, organizations not only comply with WCAG but also embrace a more inclusive digital experience, one where security and usability coexist rather than compete.
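To make the rule concrete, here is a minimal Python sketch of how a tester might record each step of a login flow and check it against the criterion. The four escape conditions (an alternative method, an assisting mechanism, object recognition, and personal content) come from the text of SC 3.3.8. The `AuthStep` class and `passes_3_3_8` function are hypothetical names, and the model is a simplification: deciding whether a step actually involves a cognitive function test is still the tester's judgment.

```python
from dataclasses import dataclass


@dataclass
class AuthStep:
    """One step in a login flow, as recorded by a tester (hypothetical model)."""
    name: str
    requires_cognitive_test: bool        # e.g. recall a password, transcribe a code, solve a puzzle
    has_alternative: bool = False        # another method with no cognitive function test
    has_mechanism: bool = False          # e.g. password manager autofill, copy and paste
    is_object_recognition: bool = False  # exception permitted at Level AA
    is_personal_content: bool = False    # e.g. picking an image the user supplied; Level AA


def passes_3_3_8(step: AuthStep) -> bool:
    """Simplified reading of SC 3.3.8 for a single authentication step."""
    if not step.requires_cognitive_test:
        return True
    # A cognitive function test is acceptable only if one of the four conditions applies.
    return (
        step.has_alternative
        or step.has_mechanism
        or step.is_object_recognition
        or step.is_personal_content
    )


flow = [
    AuthStep("Password field that allows autofill and paste", True, has_mechanism=True),
    AuthStep("Distorted-text CAPTCHA with no alternative", True),
    AuthStep("Passkey sign-in on the user's device", False),
]
for step in flow:
    print(f"{step.name}: {'pass' if passes_3_3_8(step) else 'fail'}")
```

Keeping the Level AA exceptions as separate fields makes it easy to see which steps would still fail under the stricter Level AAA criterion, 3.3.9 Accessible Authentication (Enhanced), which removes the object recognition and personal content exceptions.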

Who does this benefit?

- Users with cognitive disabilities benefit from authentication processes that don’t rely on memory, pattern recognition, or problem-solving under pressure.

- Users with memory impairments gain equitable access through alternatives like password managers, saved credentials, or biometrics.

- Users with motor impairments experience fewer physical barriers when authentication supports tools like autofill or device-based sign-in.

- Users with language and learning differences can authenticate without struggling through complex or confusing verification tasks.

- Older adults have a smoother experience when systems do not require memorization or time-limited responses.

Testing via automation

Automated testing offers efficiency and consistency, quickly identifying technical patterns such as inaccessible form fields, missing labels, or markup that blocks password managers. However, automation falls short when evaluating the user experience of authentication: whether the process truly avoids cognitive barriers or supports alternative input methods. It can confirm that fields exist, but not whether users can actually complete authentication without memory or recognition challenges.
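As an illustration of the kind of code-level check automation handles well, the Python sketch below uses only the standard library's `html.parser` to flag credential inputs whose markup may get in the way of password managers or paste: `autocomplete="off"`, and inline `onpaste`, `oncopy`, or `ondrop` handlers that return false or call `preventDefault`. The class and function names are hypothetical, the set of input types treated as credential fields is an assumption, and the check only sees static HTML; listeners attached with `addEventListener` in JavaScript still need a browser-based test or manual review.

```python
from html.parser import HTMLParser

# Input types treated as credential fields (an assumption for this sketch).
CREDENTIAL_TYPES = {"password", "email", "text", "tel"}
PASTE_EVENTS = ("onpaste", "oncopy", "ondrop")


class AutofillBlockFinder(HTMLParser):
    """Flags <input> markup that suggests paste or autofill is being blocked."""

    def __init__(self) -> None:
        super().__init__()
        self.issues: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        # html.parser lowercases attribute names; valueless attributes arrive as None.
        a = {name: (value or "") for name, value in attrs}
        if a.get("type", "text").lower() not in CREDENTIAL_TYPES:
            return
        field = a.get("name") or a.get("id") or "(unnamed input)"
        if a.get("autocomplete", "").strip().lower() == "off":
            self.issues.append(f"{field}: autocomplete=off")
        for event in PASTE_EVENTS:
            # Normalize whitespace and case before looking for blocking code.
            handler = " ".join(a.get(event, "").split()).lower()
            if "return false" in handler or "preventdefault" in handler:
                self.issues.append(f"{field}: {event} handler blocks the action")


def find_blocking(html: str) -> list[str]:
    finder = AutofillBlockFinder()
    finder.feed(html)
    finder.close()
    return finder.issues


sample = """
<form>
  <label for="user">Email</label>
  <input id="user" name="user" type="email" autocomplete="username">
  <label for="pw">Password</label>
  <input id="pw" name="pw" type="password" autocomplete="off" onpaste="return false;">
</form>
"""
for issue in find_blocking(sample):
    print(issue)
```

A flagged field is a lead for manual follow-up rather than an automatic failure, since some password managers ignore `autocomplete="off"`.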

Testing via Artificial Intelligence (AI)

AI-based testing adds a layer of intelligence, simulating user interactions and detecting friction points that might cause cognitive overload or accessibility breakdowns. AI can analyze user flows, flag confusing or multi-step verification paths, and detect inaccessible CAPTCHA patterns. Still, AI tools often rely on heuristic models that may miss subtle contextual cues, like whether an instruction feels cognitively demanding or if a “simple” verification question still requires recall. Without human oversight, AI risks misjudging user effort and overestimating accessibility.

Testing via manual evaluation

Manual testing remains essential. Human testers, especially those with lived experience of cognitive or motor disabilities, can reveal the authentic usability of authentication flows. They can determine whether autofill and password managers work smoothly, whether alternative authentication options (like biometrics or email links) are provided, and whether instructions are truly understandable. The trade-off, of course, is time and scalability. Manual testing demands expertise, planning, and real-world validation, but it delivers the insight automation and AI can’t: how it feels to authenticate inclusively.

Which approach is best?

A hybrid approach to testing WCAG 3.3.8 Accessible Authentication (Minimum) blends automation, AI, and manual expertise to create an inclusive, verifiable authentication experience. The process begins with automated testing to establish a technical baseline: verifying semantic markup, ensuring form controls are properly labeled, checking compatibility with password managers, and confirming that authentication workflows don’t block autofill or copy and paste. These automated checks efficiently surface structural and code-level issues that could hinder accessible authentication, forming a foundation for deeper evaluation.
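One of the baseline checks described above, confirming that each form control has a label, can be sketched with Python's standard library alone. The hypothetical `unlabeled_controls` function treats a control as labeled if it sits inside a `<label>`, is referenced by a `<label for>`, or carries `aria-label` or `aria-labelledby`. This is a simplification: it does not verify that the `aria-labelledby` target exists or compute the full accessible name, so a dedicated accessibility testing engine remains the better tool for production audits.

```python
from dataclasses import dataclass
from html.parser import HTMLParser

# Input types that do not need a text label for this check.
NON_TEXT_TYPES = {"hidden", "submit", "button", "reset", "image"}


@dataclass
class Control:
    tag: str
    attrs: dict
    inside_label: bool  # True if the control is nested inside a <label>


class LabelChecker(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.label_depth = 0                  # > 0 while parsing inside a <label>
        self.label_for_ids: set[str] = set()  # ids referenced by <label for="...">
        self.controls: list[Control] = []

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        if tag == "label":
            self.label_depth += 1
            if a.get("for"):
                self.label_for_ids.add(a["for"])
        elif tag in ("input", "select", "textarea"):
            if tag == "input" and a.get("type", "text").lower() in NON_TEXT_TYPES:
                return
            self.controls.append(Control(tag, a, self.label_depth > 0))

    def handle_endtag(self, tag):
        if tag == "label" and self.label_depth > 0:
            self.label_depth -= 1


def unlabeled_controls(html: str) -> list[str]:
    """Return the name (or id, or tag) of each control with no detectable label."""
    checker = LabelChecker()
    checker.feed(html)
    checker.close()  # labels may appear after their controls, so decide at the end
    missing = []
    for c in checker.controls:
        labeled = (
            c.inside_label
            or bool(c.attrs.get("aria-label", "").strip())
            or bool(c.attrs.get("aria-labelledby", "").strip())
            or c.attrs.get("id", "") in checker.label_for_ids
        )
        if not labeled:
            missing.append(c.attrs.get("name") or c.attrs.get("id") or c.tag)
    return missing


sample = """
<form>
  <label>Username <input name="username" autocomplete="username"></label>
  <label for="pw">Password</label>
  <input id="pw" name="password" type="password">
  <input name="otp" inputmode="numeric" placeholder="Code">
  <button type="submit">Sign in</button>
</form>
"""
print(unlabeled_controls(sample))  # ['otp']
```

The code field relies on a placeholder alone, which this check deliberately does not count as a label.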

Next, AI-based testing expands that baseline by simulating real-world cognitive demands. AI can model authentication flows, predicting where users might struggle with memory-based or recognition-based tasks. It can identify when verification challenges, such as CAPTCHA images, puzzles, or one-time codes that must be transcribed by hand before they expire, impose unnecessary cognitive barriers. By analyzing behavior patterns and decision points, AI provides early insights into whether an authentication process creates friction for users with diverse abilities.
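The sketch below is not an AI model; it is a simple keyword heuristic, written in Python, of the kind that could pre-select candidate verification challenges for closer AI-assisted or human review. It flags elements whose `id`, `class`, `name`, or `src` mentions "captcha", and code-entry inputs (guessed from names such as `otp`) that lack the HTML `autocomplete="one-time-code"` token, which allows supporting browsers to offer to fill the code. The keyword patterns and function names are assumptions, and a flag means "review this step", not "fails 3.3.8".

```python
import re
from html.parser import HTMLParser

# Keyword heuristics: assumptions for this sketch, not part of any standard.
CAPTCHA_HINT = re.compile(r"captcha", re.IGNORECASE)
OTP_HINT = re.compile(r"otp|one[-_]?time[-_]?code|verification[-_]?code", re.IGNORECASE)


class ChallengeFinder(HTMLParser):
    """Collects elements that may be verification challenges worth reviewing."""

    def __init__(self) -> None:
        super().__init__()
        self.flags: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        hints = " ".join(a.get(key, "") for key in ("id", "class", "name", "src"))
        if CAPTCHA_HINT.search(hints):
            self.flags.append(f"<{tag}> looks like a CAPTCHA; check for an alternative or mechanism")
        if tag == "input" and OTP_HINT.search(hints):
            # autocomplete may hold several space-separated tokens.
            tokens = a.get("autocomplete", "").lower().split()
            if "one-time-code" not in tokens:
                field = a.get("name") or a.get("id") or "(unnamed input)"
                self.flags.append(f"<input> {field!r} may require transcribing a code by hand")


def find_challenges(html: str) -> list[str]:
    finder = ChallengeFinder()
    finder.feed(html)
    finder.close()
    return finder.flags


sample = """
<form>
  <label for="code">Enter the code we texted you</label>
  <input id="code" name="otp_code" inputmode="numeric">
  <div class="g-recaptcha"></div>
</form>
"""
for flag in find_challenges(sample):
    print(flag)
```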

Finally, manual testing closes the loop with human validation. Testers with accessibility expertise and, ideally, lived experience engage directly with the authentication journey. They verify that alternative authentication methods (such as device-based login, passwordless entry, or magic links) function as intended, and that users can complete each step without recalling or manipulating complex information. Manual testers also confirm the overall cognitive flow: are instructions clear, is feedback immediate, and are the options equitable?

When combined, these three approaches deliver comprehensive assurance: automation ensures technical readiness, AI anticipates cognitive barriers, and manual testing validates real-world usability. This hybrid strategy transforms compliance into confidence, demonstrating not just that authentication meets accessibility standards, but that it feels accessible to every user who needs to log in securely and independently.