Note: This article on testing Error Suggestion was written by a human, with the assistance of artificial intelligence.

Explanation of the success criterion

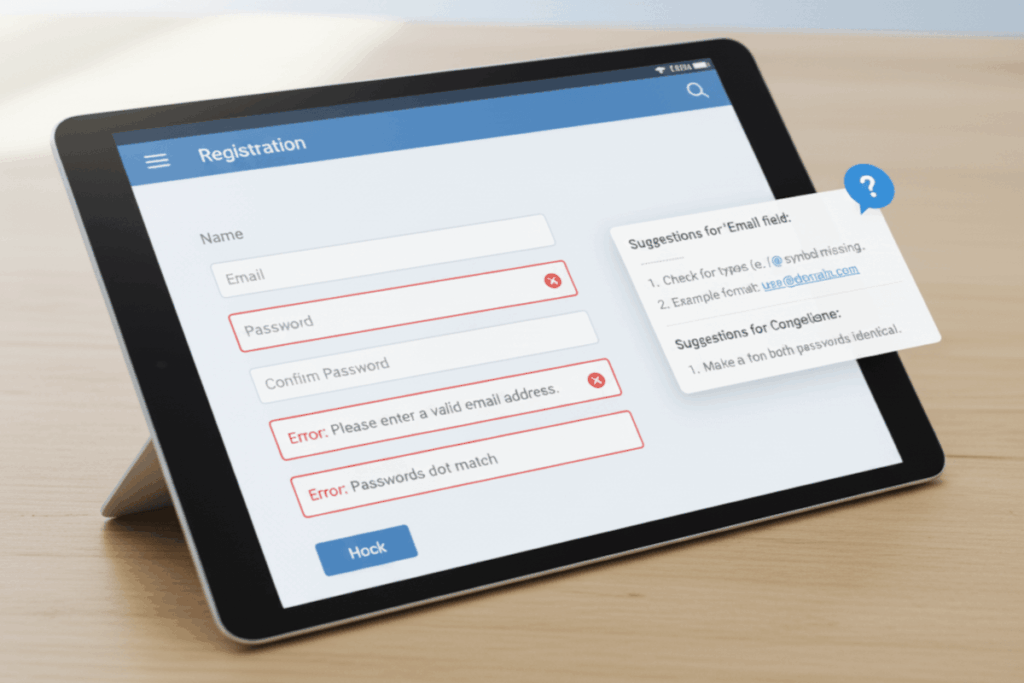

WCAG 3.3.3 Error Suggestion is a Level AA Success Criterion. It is designed to improve usability and reduce user frustration by helping people recover from mistakes during data entry or interaction. It requires that when an input error is automatically detected and suggestions for correction are known, those suggestions are provided to the user, unless doing so would jeopardize the security or purpose of the content.

This criterion reinforces the principle that accessibility is not only about preventing barriers but also about guiding users toward success. Effective error suggestion involves clear, actionable feedback, such as indicating the required format for a date, or highlighting a missing field with a message explaining what needs to be entered. When done well, it transforms forms and input processes from potential friction points into supportive, inclusive experiences that embody accessibility maturity.
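
To make the date example concrete, here is a minimal sketch of a validator that returns a suggestion rather than a bare rejection. The function name `suggest_date_fix`, the YYYY-MM-DD target format, the day-first alternative formats, and the message wording are all assumptions chosen for illustration, not requirements of the criterion.

```python
from datetime import datetime
from typing import Optional

# Assumed target format for this sketch: YYYY-MM-DD.
EXPECTED_FORMAT = "%Y-%m-%d"
# Assumed day-first alternatives that users might type instead.
ALTERNATIVE_FORMATS = ("%d/%m/%Y", "%d.%m.%Y", "%d-%m-%Y")

GENERIC_HINT = "Enter the date as YYYY-MM-DD, for example 1990-04-23."


def suggest_date_fix(raw: str) -> Optional[str]:
    """Return None when the value is valid, otherwise a message with a suggestion."""
    value = raw.strip()
    if not value:
        # Missing field: say what is needed, not only that something is wrong.
        return "Date is required. " + GENERIC_HINT
    try:
        datetime.strptime(value, EXPECTED_FORMAT)
        return None
    except ValueError:
        pass
    # If the input parses in another common format, propose the corrected value.
    for fmt in ALTERNATIVE_FORMATS:
        try:
            parsed = datetime.strptime(value, fmt)
        except ValueError:
            continue
        corrected = parsed.strftime(EXPECTED_FORMAT)
        return f"Enter the date as YYYY-MM-DD. Did you mean {corrected}?"
    return GENERIC_HINT
```

A message such as "Did you mean 1990-04-23?" gives the user a correction to accept, whereas "Invalid date" only restates that something went wrong.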

Who does this benefit?

Thoughtful implementation benefits everyone, particularly users with cognitive, learning, or language disabilities, by reducing cognitive load and increasing confidence during digital interactions.

- Users with cognitive or learning disabilities benefit from clear, actionable guidance that reduces confusion and helps them correct mistakes independently.

- Users with language or literacy challenges gain confidence when error messages explain what went wrong in plain, understandable language.

- Screen reader users rely on accessible, programmatically associated error messages to identify and resolve input issues without visual cues.

- Keyboard-only and assistive technology users benefit when error suggestions are easy to navigate and interact with, maintaining workflow efficiency.

- All users appreciate clear, contextual error feedback that saves time and prevents frustration, improving trust and engagement.

Testing via Automated testing

Automated testing is efficient at identifying technical indicators of error handling, such as the presence of aria-describedby attributes or error message containers tied to input fields. However, automation quickly reaches its limits: it cannot assess whether an error message is meaningful, actionable, or contextually appropriate to the user’s task. Automation is best viewed as a foundational check, not a full evaluation.
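
As a rough illustration of such a technical check, the sketch below scans a rendered HTML snapshot for fields marked aria-invalid="true" and reports those whose aria-describedby is missing or points to no element containing text. It assumes the page uses that particular ARIA pattern, which is one common approach rather than the only conformant one; the class and function names are hypothetical, and only the Python standard library is used.

```python
from html.parser import HTMLParser
from typing import List

# Elements without a closing tag; they never hold message text.
VOID_TAGS = {"input", "br", "img", "hr", "meta", "link"}


class ErrorAssociationChecker(HTMLParser):
    """Collects invalid fields and the text content of every element with an id."""

    def __init__(self):
        super().__init__()
        self.invalid_fields = []  # (field label, list of referenced ids)
        self.text_by_id = {}      # element id -> accumulated text
        self._open = []           # stack of (tag, id or None) for open elements

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag in ("input", "select", "textarea") and attributes.get("aria-invalid") == "true":
            refs = (attributes.get("aria-describedby") or "").split()
            label = attributes.get("name") or attributes.get("id") or "?"
            self.invalid_fields.append((label, refs))
        if tag not in VOID_TAGS:
            element_id = attributes.get("id")
            if element_id:
                self.text_by_id.setdefault(element_id, "")
            self._open.append((tag, element_id))

    def handle_endtag(self, tag):
        # Close the nearest matching open element (and anything left open inside it).
        for index in range(len(self._open) - 1, -1, -1):
            if self._open[index][0] == tag:
                del self._open[index:]
                break

    def handle_data(self, data):
        # Credit text to every open ancestor that has an id.
        for _, element_id in self._open:
            if element_id:
                self.text_by_id[element_id] += data


def find_unassociated_errors(html: str) -> List[str]:
    """Return one finding per invalid field that lacks a linked, non-empty message."""
    checker = ErrorAssociationChecker()
    checker.feed(html)
    checker.close()
    findings = []
    for label, refs in checker.invalid_fields:
        if not refs:
            findings.append(f"{label}: aria-invalid without aria-describedby")
        elif not any(checker.text_by_id.get(ref, "").strip() for ref in refs):
            findings.append(f"{label}: aria-describedby points to no element with text")
    return findings


SAMPLE = """
<form>
  <input name="email" aria-invalid="true" aria-describedby="email-error">
  <p id="email-error">Enter an email address such as name@example.com.</p>
  <input name="phone" aria-invalid="true">
  <input name="zip" aria-invalid="true" aria-describedby="zip-error">
</form>
"""

if __name__ == "__main__":
    for finding in find_unassociated_errors(SAMPLE):
        print(finding)
```

In the sample, the email field passes while the phone and zip fields are flagged. A pass here only confirms that a message exists and is linked; it says nothing about whether the message actually suggests a correction.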

Testing via Artificial Intelligence (AI)

AI-based testing expands on automation by interpreting natural language patterns and user flows. AI can analyze error messages for clarity, tone, and linguistic hints of helpfulness, detecting issues like vague guidance (“invalid entry”) or missing instructions. It can also simulate user interactions, predicting whether suggestions lead to successful corrections. Yet AI still struggles with nuance: it cannot fully grasp intent, cultural context, or the emotional experience of encountering an error. False positives and misjudged tone remain challenges, requiring human oversight to validate findings.

Testing via Manual testing

Manual testing remains the most critical layer. Skilled testers can evaluate whether error suggestions genuinely assist the user, align with design intent, and support users with cognitive or language differences. Manual testers assess readability, clarity, timing, and contextual appropriateness, factors no automated tool can reliably judge. However, manual testing is time-intensive and prone to inconsistency if not guided by clear methodology.

Which approach is best?

The most effective approach blends these methods: automation for coverage, AI for intelligent insight, and manual testing for empathy and accuracy. Together, they create a robust validation strategy that ensures error suggestions not only exist but truly empower users to succeed.

The strategy begins with automated testing to establish a technical baseline, detecting the presence of error states, ARIA associations, and programmatic links between inputs and error messages. These fast, repeatable checks ensure coverage across large digital ecosystems and help identify where error handling exists or is missing entirely.

From there, AI-based testing takes analysis further by evaluating the quality of the feedback. Through natural language processing, AI can assess whether an error message provides a corrective path or merely restates the problem, flagging messages that lack clarity, precision, or inclusiveness. AI can also simulate user input variations to test how responsive and adaptive the error suggestions are under real-world conditions.
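
The distinction between a message that offers a corrective path and one that merely restates the problem can be sketched with a simple triage function. To be clear, this is not natural language processing: it is a keyword heuristic standing in for what an AI model would do with far more nuance, and the phrase lists, labels, and function name are hypothetical.

```python
import re

# Hypothetical patterns for messages that only restate that something is wrong.
VAGUE_PATTERNS = [
    r"^invalid( entry| input| value)?\.?$",
    r"^error\.?$",
    r"^something went wrong\.?$",
]

# Hypothetical cues that a message is pointing toward a correction.
SUGGESTION_CUES = [
    r"\bfor example\b",
    r"\bsuch as\b",
    r"\bmust (be|contain|include)\b",
    r"\buse the format\b",
    r"\bdid you mean\b",
    r"\benter\b",
]


def triage_message(message: str) -> str:
    """Label a message 'vague', 'has-suggestion-cue', or 'needs-review'."""
    text = message.strip().lower()
    if any(re.search(pattern, text) for pattern in VAGUE_PATTERNS):
        return "vague"
    if any(re.search(cue, text) for cue in SUGGESTION_CUES):
        return "has-suggestion-cue"
    # Anything the heuristic cannot place goes to a human reviewer.
    return "needs-review"
```

Even in this crude form, the output is a prioritization aid rather than a verdict: a message labeled "has-suggestion-cue" can still be unhelpful in context, which is why the human review layer follows.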

The third layer, manual testing, is where human judgment validates and enriches these insights. Testers interact directly with the interface to determine whether the suggestions genuinely assist users in completing their tasks. They assess tone, timing, visibility, and cognitive load, ensuring that guidance is actionable and accessible to users with varying abilities, languages, and assistive technologies. Manual evaluators can also test emotional usability: whether users feel supported rather than penalized when errors occur.

When unified, this hybrid model delivers both breadth and depth: automation checks technical compliance, AI enhances linguistic and behavioral analysis, and human testing brings empathy and lived experience to the forefront. The result is not just conformance but true usability: a digital environment where error suggestions guide, empower, and build user trust at every step.

Related Resources

- Understanding Success Criterion 3.3.3 Error Suggestion

- Mind the WCAG automation gap

- Writing Accessible Form Messages

- Providing a text description when user input falls outside the required format or values

- Providing suggested correction text

- Using aria-alertdialog to Identify Errors

- Providing a text description when the user provides information that is not in the list of allowed values

- Indicating when user input falls outside the required format or values in PDF forms

- Creating a mechanism that allows users to jump to errors

- Providing client-side validation and alert

- Providing client-side validation and adding error text via the DOM