Note: This article on testing Images of Text was written by a human, with the assistance of artificial intelligence.

Explanation of the success criterion

WCAG 1.4.5 Images of Text is a Level AA Success Criterion. When the technology in use can achieve the same visual presentation, information should be conveyed with real text rather than images of text, so that it can be resized, restyled, and interpreted by assistive technologies. There are two exceptions: images of text that can be visually customized to the user's requirements, and images where a particular presentation of text is essential to the information being conveyed. Logotypes (text that is part of a logo or brand name) are considered essential.
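The decision a tester makes for each image of text can be written out as a small function. This is a minimal sketch of one reading of the criterion, not an official algorithm: the requirement only applies when the technology can reproduce the presentation, and it exempts images of text that are user-customizable or essential (with logotypes treated as essential). The `ImageOfText` class, its field names, and the answers recorded for the feedback link are hypothetical; in practice a human supplies them.

```python
from dataclasses import dataclass


@dataclass
class ImageOfText:
    """Answers a tester records for one image containing text (hypothetical model)."""
    presentation_achievable_with_text: bool  # could HTML/CSS reproduce the same look?
    is_logotype: bool                        # text that is part of a logo or brand name
    presentation_essential: bool             # exact rendering is essential to the information
    user_customizable: bool                  # user can change font, size, colours, spacing


def passes_1_4_5(image: ImageOfText) -> bool:
    """Return True if the image of text is allowed under this reading of SC 1.4.5."""
    # The requirement only applies when the technology can achieve the presentation.
    if not image.presentation_achievable_with_text:
        return True
    # Exception: the image can be visually customized to the user's requirements.
    if image.user_customizable:
        return True
    # Exception: essential presentation; logotypes are treated as essential.
    if image.is_logotype or image.presentation_essential:
        return True
    return False


# Hypothetical answers for an image-based "Contact Support" feedback link.
feedback_link = ImageOfText(
    presentation_achievable_with_text=True,
    is_logotype=False,
    presentation_essential=False,
    user_customizable=False,
)
print(passes_1_4_5(feedback_link))  # False
```

The function only encodes the logic; deciding whether a presentation is "essential" remains a human judgment.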

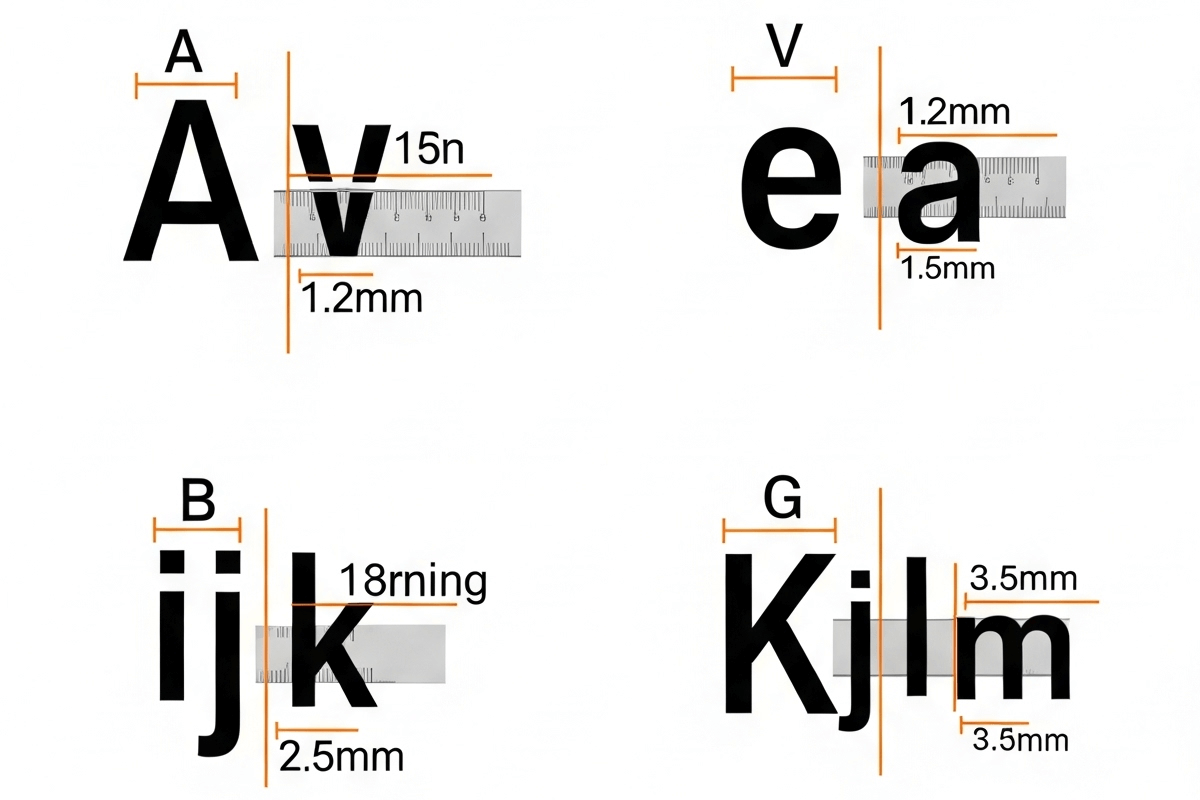

One example that has stuck with me is a financial website whose link for providing feedback was an image of text.

A user with low vision who zooms in to enlarge the content would find this text harder to read, because the image blurs and pixelates as it is scaled up. Users with cognitive impairments might also have difficulty deciphering the letters.

If this were real text, it would stay sharp and easy to read at any zoom level the user chose.

Contact Support

Who does this benefit?

- People with low vision (who may have trouble reading the text with the authored font family, size and/or color).

- People with visual tracking problems (who may have trouble reading the text with the authored line spacing and/or alignment).

- People with cognitive disabilities that affect reading.

Testing via automated tools

Automated tools offer a fast, consistent way to scan pages for img elements and CSS background images that may contain text, flagging common issues such as image-based buttons or headings by looking at file names, alt text, or CSS clues.

However, automated testing struggles to recognize whether an image actually contains text, or whether that text is essential to the design. It can't detect stylized or embedded text, often misclassifies decorative elements, and rarely uses reliable OCR. While helpful for initial detection, automated testing alone can't fully evaluate WCAG 1.4.5 because it lacks visual and contextual insight.
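To show the kind of clues an automated first pass relies on, here is a minimal sketch using Python's standard `html.parser`. The heuristics are assumptions chosen for illustration, not the rules of any particular tool: multi-word alt text, file names containing words such as "button" or "banner", and inline `background-image` styles. Note that it never looks at pixels, and it ignores external stylesheets, which is exactly the limitation described above.

```python
import re
from html.parser import HTMLParser

# Hypothetical heuristic: words often found in file names of text-bearing images.
SUSPECT_NAME = re.compile(r"(text|title|heading|banner|button|btn|label)", re.IGNORECASE)
BACKGROUND_URL = re.compile(r"background-image\s*:\s*url\(([^)]*)\)", re.IGNORECASE)


class ImageOfTextScanner(HTMLParser):
    """Collect elements that *might* be images of text, for a human to review."""

    def __init__(self) -> None:
        super().__init__()
        self.findings: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)  # valueless attributes map to None, hence the `or ""` below
        src = a.get("src") or ""
        alt = (a.get("alt") or "").strip()
        is_image = tag == "img" or (tag == "input" and (a.get("type") or "").lower() == "image")
        if is_image:
            # Alt text with several words often transcribes text shown in the image.
            if len(alt.split()) >= 2:
                self.findings.append((src, f"multi-word alt text: {alt!r}"))
            elif SUSPECT_NAME.search(src):
                self.findings.append((src, "file name suggests text"))
        # Only inline style attributes are checked; stylesheet rules are not.
        match = BACKGROUND_URL.search(a.get("style") or "")
        if match:
            self.findings.append((match.group(1).strip("'\" "), f"inline CSS background on <{tag}>"))


page = """
<a href="/feedback"><img src="img/feedback-button.png" alt="Contact Support"></a>
<img src="img/logo.svg" alt="Logo">
<div style="background-image: url('hero-title.jpg')"></div>
"""
scanner = ImageOfTextScanner()
scanner.feed(page)
for src, reason in scanner.findings:
    print(src, "-", reason)
```

The sample markup is invented. The feedback button and the background image are flagged, while the logo slips through because neither its alt text nor its file name matches a heuristic.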

Testing via Artificial Intelligence (AI)

AI-based testing can detect text within images by analyzing layout, font styles, and visual content, going beyond what traditional automated tools can catch. It can efficiently scan large volumes of content such as PDFs, infographics, and web pages, identifying embedded text and attempting to distinguish between decorative images, logos, and meaningful content. With OCR, AI can extract text from images and compare it to nearby HTML to check for real-text alternatives.

However, AI-based testing can misidentify stylized text, struggle with complex backgrounds or fonts, and often requires human judgment to determine if text-in-image use is essential or replaceable. It may also flag valid exceptions like logos and perform poorly with unfamiliar designs, layouts, or languages.
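The comparison step described in this section (checking OCR output against nearby HTML) can be sketched with the standard library's `difflib`. This assumes the OCR text has already been produced by some engine; the function name `text_also_in_html` and the 0.85 threshold are illustrative choices, not values from WCAG or any tool. A fuzzy match is used so that small OCR misreads do not hide a real-text alternative.

```python
import difflib
import re


def normalize(text: str) -> str:
    """Lower-case and keep only letters and digits so OCR noise matters less."""
    return " ".join(re.findall(r"[a-z0-9]+", text.lower()))


def text_also_in_html(ocr_text: str, nearby_html_text: str, threshold: float = 0.85) -> bool:
    """Return True if the words found in the image also appear as real text nearby.

    `ocr_text` is assumed to come from any OCR engine; `threshold` is an
    illustrative cut-off for the fuzzy comparison.
    """
    ocr = normalize(ocr_text)
    html = normalize(nearby_html_text)
    if not ocr:
        return True  # nothing was extracted, so nothing is missing from the HTML
    if ocr in html:
        return True
    # Slide a window of the same word count over the HTML text and keep the best
    # similarity, which tolerates small misreads such as "Supp0rt".
    ocr_words, html_words = ocr.split(), html.split()
    n = len(ocr_words)
    best = 0.0
    for start in range(max(1, len(html_words) - n + 1)):
        window = " ".join(html_words[start:start + n])
        best = max(best, difflib.SequenceMatcher(None, ocr, window).ratio())
    return best >= threshold


print(text_also_in_html("Contact Supp0rt", "Need help? Contact Support today."))  # True
print(text_also_in_html("Contact Support", "Send us your feedback"))  # False
```

A match is only a signal for the reviewer: it suggests the information is also available as real text, but a person still has to decide whether the image itself is acceptable.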

Testing via manual review

Manual testers can judge whether images of text are decorative or essential and if they can be replaced with real text. They assess if the visual style is necessary for branding or design, like logos or stylized titles, and spot nuanced uses (quotes, infographics) that automated tools often miss. Manual testing also verifies accessibility with screen readers and keyboard navigation when real text isn’t used.

However, this process is time-consuming, especially on image-heavy sites, and judgments about what’s “essential” can vary, requiring deep WCAG knowledge. With limited automated support for this criterion, manual review remains crucial but labor-intensive.

Which approach is best for testing Images of Text?

No single approach for testing WCAG 1.4.5 Images of Text is perfect. However, combining the strengths of each approach gives a more reliable result than any one of them alone.

The best approach to testing this criterion combines automated, AI-based, and manual methods. Automated tools provide a fast first pass, flagging obvious cases where images contain text, such as img elements with text-like alt attributes or CSS background images. AI-based tools build on this by using visual recognition to detect stylized or embedded text that is not coded as actual text. However, both methods fall short in understanding context or design intent. Manual testing is essential to determine whether an image of text is decorative, essential for presentation, or could be replaced with real text. By layering these methods (automated for speed, AI for visual breadth, and manual for context and conformance), you get a thorough and accurate evaluation.
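The layering described here can be sketched as a triage step that builds a manual review queue. This is a minimal illustration under stated assumptions: the `Candidate` fields stand in for the outputs of an automated pass, an AI model's confidence score, and an OCR-to-HTML comparison, and the 0.5 threshold and sort order are arbitrary choices, not part of any standard or tool.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    src: str
    flagged_by_rules: bool      # result of the automated first pass (assumed)
    ai_text_confidence: float   # 0..1 score from a visual/OCR model (assumed)
    text_found_in_html: bool    # whether the image's text also exists as real text


def manual_review_queue(candidates: list[Candidate], ai_threshold: float = 0.5) -> list[Candidate]:
    """Keep anything either tool suspects, then order it for a human reviewer."""
    suspects = [
        c for c in candidates
        if c.flagged_by_rules or c.ai_text_confidence >= ai_threshold
    ]
    # Images whose text is not repeated as real text come first (False sorts
    # before True); within each group, higher AI confidence comes first.
    return sorted(suspects, key=lambda c: (c.text_found_in_html, -c.ai_text_confidence))


queue = manual_review_queue([
    Candidate("feedback-button.png", True, 0.95, False),
    Candidate("logo.svg", False, 0.90, False),
    Candidate("divider.png", False, 0.05, True),
    Candidate("hero-title.jpg", True, 0.70, True),
])
print([c.src for c in queue])  # ['feedback-button.png', 'logo.svg', 'hero-title.jpg']
```

The file names and scores are invented. The logo deliberately stays in the queue: neither tool can confirm that it is a logotype, so only a human reviewer can mark it as a valid exception.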