Note: This article on testing Location was written by a human, with the assistance of artificial intelligence.

Explanation of the success criterion

WCAG 2.4.8 Location is a Level AAA Success Criterion: information about the user's location within a set of web pages must be available. Level AAA is the highest conformance level, and this criterion helps every user orient themselves within a website or application through clear navigational context. Whether through visible breadcrumbs, descriptive page titles, or highlighted navigation states, these cues anchor users in the digital landscape, turning sprawling websites into logical, navigable ecosystems.

While Level AAA is aspirational rather than mandatory, striving for 2.4.8 Location signals a deeper commitment to accessibility. Organizations that embrace this standard demonstrate that accessibility is not just a compliance checkbox; it is a strategic priority that puts real user experiences first.

Who does this benefit?

- People with cognitive disabilities depend on consistent cues to remain oriented and reduce confusion.

- Users with low vision, including those using screen magnification or screen readers, benefit from location cues that do not depend on taking in the whole visual layout at once.

- Screen reader users benefit from breadcrumbs, page titles, and headings that clarify position and hierarchy.

- Keyboard-only users need logical sequencing and clear focus states to know their place in the journey.

- All users gain a sense of predictability and ease, which is particularly valuable on large or content-heavy websites.

Testing via Automated Testing

Automated testing serves as the foundation for scale and consistency. It can quickly identify the presence of key navigational structures that suggest location awareness, such as breadcrumbs, page titles, and ARIA landmarks. However, automation only measures existence, not effectiveness. A breadcrumb that is coded incorrectly or hidden visually may pass an automated check while failing real users. Automation sets the baseline but cannot verify clarity or usability.
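To make the "existence, not effectiveness" limitation concrete, here is a minimal sketch of a presence check using only Python's standard-library `html.parser`. It assumes pages are available as HTML strings and that breadcrumbs follow the common `<nav aria-label="Breadcrumb">` convention with `aria-current="page"` on the current item; the names `LocationMarkerParser` and `find_location_markers`, and the sample page, are hypothetical. Note that it reports a breadcrumb hidden with CSS as present, which is exactly the gap described above.

```python
from html.parser import HTMLParser


class LocationMarkerParser(HTMLParser):
    """Records which location-related markers appear in one HTML page.

    This checks presence only: CSS is never evaluated, so a breadcrumb
    hidden with display:none is still counted as present.
    """

    def __init__(self) -> None:
        super().__init__()
        self.title_text = ""
        self._in_title = False
        self.has_breadcrumb_nav = False
        self.has_current_page = False
        self.landmarks: set[str] = set()

    def handle_starttag(self, tag, attrs):
        # Boolean attributes arrive with value None; normalise to "".
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "title":
            self._in_title = True
        elif tag == "main":
            self.landmarks.add("main")
        elif tag == "nav":
            self.landmarks.add("navigation")
            # Assumed convention: <nav aria-label="Breadcrumb"> wraps the trail.
            if "breadcrumb" in attributes.get("aria-label", "").lower():
                self.has_breadcrumb_nav = True
        # aria-current="page" marks the link for the page being viewed.
        if attributes.get("aria-current") == "page":
            self.has_current_page = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title_text += data


def find_location_markers(html: str) -> dict:
    """Return a presence-only report for one page (hypothetical helper)."""
    parser = LocationMarkerParser()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title_text.strip(),
        "breadcrumb": parser.has_breadcrumb_nav,
        "current_page_marked": parser.has_current_page,
        "landmarks": sorted(parser.landmarks),
    }


# Hypothetical page: the breadcrumb is hidden with display:none,
# yet every marker below is still reported as present.
page = """
<html><head><title>Returns - Help Centre - Example Shop</title></head>
<body>
  <nav aria-label="Breadcrumb" style="display:none">
    <a href="/">Home</a> <a href="/help/">Help Centre</a>
    <a href="/help/returns/" aria-current="page">Returns</a>
  </nav>
  <main><h1>Returns</h1></main>
</body></html>
"""
print(find_location_markers(page))
```

A passing report here only says the markers exist; whether users can actually see and understand them is left to the AI and manual phases.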

Testing via Artificial Intelligence (AI)

AI-based testing bridges that gap by introducing contextual and visual intelligence. AI tools analyze layout patterns, visual consistency, and the relationship between navigation elements across pages. They can detect subtle inconsistencies that affect orientation, such as a misplaced breadcrumb or a navigation highlight that fails to mark the current page. Yet even AI lacks a full human understanding of intent: it may recognize a pattern but not discern whether that pattern meaningfully conveys structure to users.

Testing via Manual Testing

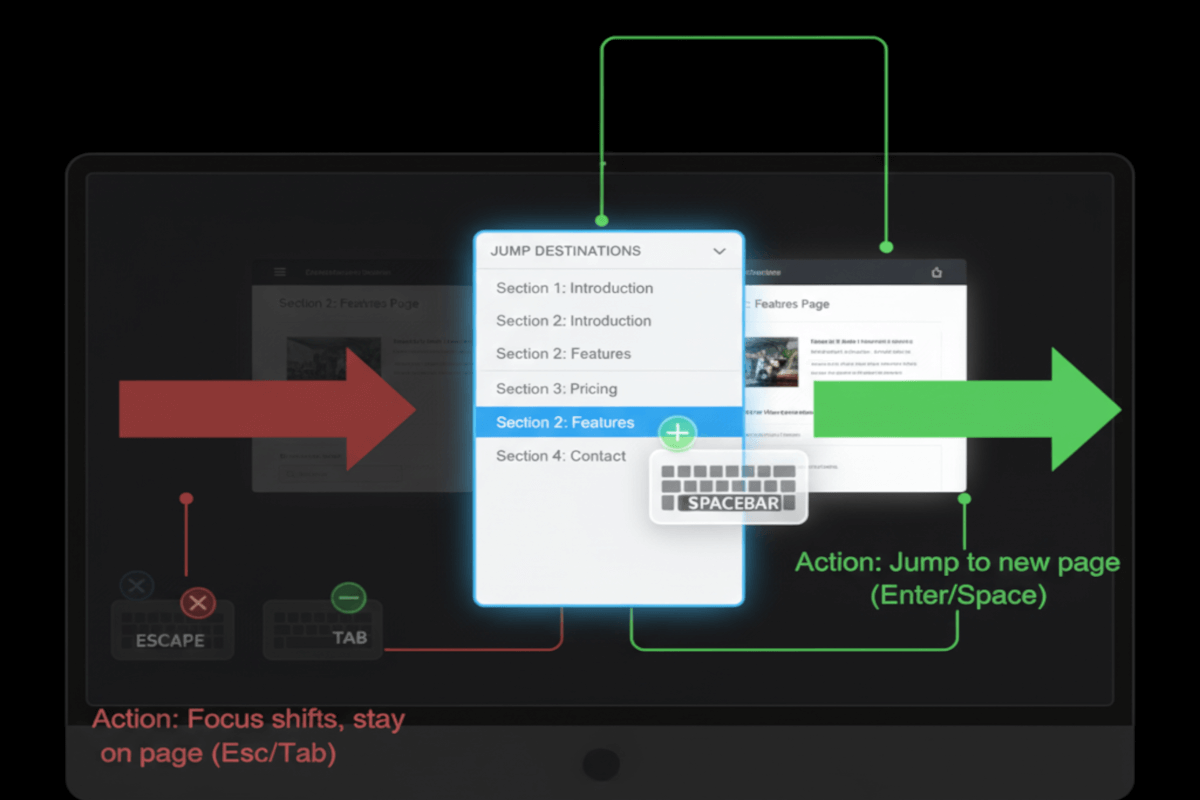

Manual testing delivers the human insight that technology cannot replicate. Testers navigate as real users, using screen readers, keyboards, and visual inspection, to assess whether navigation cues are perceivable, understandable, and genuinely helpful. They evaluate if breadcrumbs reflect true hierarchy, if highlighted menu items make sense, and if the user can easily reorient after navigating deep into content. Though more time-intensive, this human touch ensures the result is not just compliant, but truly user-centered.

Which approach is best?

No single method captures the full picture of WCAG 2.4.8 Location. A hybrid approach, combining automation, AI, and manual testing, creates a comprehensive framework for verification and validation. Automation provides broad coverage, AI interprets patterns and presentation, and manual testing validates meaning and usability.

The process begins with automated testing to perform broad, foundational scans across the site. Automated tools can detect technical markers like the presence of breadcrumbs, consistent page titles, ARIA landmarks, or navigation components, helping identify pages that may lack these key elements. This stage establishes a baseline and highlights structural inconsistencies or missing location indicators at scale.
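One part of that site-wide scan, checking page titles, can be sketched as follows. This minimal example assumes a crawl has already produced a mapping from URL to HTML (that input format, the name `scan_titles`, and the sample site are hypothetical) and flags two title problems that weaken location cues: pages with no title, and groups of pages sharing an identical title, which therefore cannot tell users which page they are on. Duplicates are a prompt for review, not a verdict.

```python
from collections import defaultdict
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text content of the first <title> element."""

    def __init__(self) -> None:
        super().__init__()
        self._in_title = False
        self._done = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title" and not self._done:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self._done = True

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def scan_titles(pages: dict[str, str]) -> dict:
    """Flag pages whose titles are missing or shared with other pages.

    `pages` maps a URL to its HTML (hypothetical crawl output).
    """
    missing = []
    by_title = defaultdict(list)
    for url, html in pages.items():
        parser = TitleParser()
        parser.feed(html)
        parser.close()
        title = " ".join(parser.title.split())  # collapse whitespace
        if title:
            by_title[title].append(url)
        else:
            missing.append(url)
    duplicates = {t: urls for t, urls in by_title.items() if len(urls) > 1}
    return {"missing": sorted(missing), "duplicates": duplicates}


# Hypothetical four-page site.
site = {
    "/help/": "<title>Help Centre - Example Shop</title>",
    "/help/returns/": "<title>Example Shop</title>",
    "/help/delivery/": "<title>Example Shop</title>",
    "/about/": "<p>No title here</p>",
}
report = scan_titles(site)
print(report)
```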

Next, AI-based testing provides visual and semantic analysis. AI tools can examine page layouts, compare the positioning and consistency of navigational elements, and even interpret whether visual breadcrumbs or menu highlights align with the site’s hierarchy. By recognizing design patterns and visual cues, AI can identify subtle misalignments, like inconsistent breadcrumb formatting or missing visual states, that automation alone might miss. It acts as a bridge between code-level detection and user-perceived experience.
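The simplest form of the hierarchy question can also be approximated without AI. The sketch below is a deliberately rule-based stand-in, not an AI technique: it compares a breadcrumb trail with the URL path of the current page, assuming the site's URLs mirror its hierarchy and end in a trailing slash (many sites do neither). The helpers `ancestor_paths` and `breadcrumb_matches_path`, the `(label, href)` input format, and the `shop.example` URLs are hypothetical.

```python
from urllib.parse import urlparse


def ancestor_paths(path: str) -> list[str]:
    """Return '/', '/a/', '/a/b/', ... for a path such as '/a/b/'."""
    segments = [s for s in path.strip("/").split("/") if s]
    paths = ["/"]
    for i in range(1, len(segments) + 1):
        paths.append("/" + "/".join(segments[:i]) + "/")
    return paths


def breadcrumb_matches_path(trail: list[tuple[str, str]], current_url: str) -> list[str]:
    """List mismatches between a breadcrumb trail and the URL hierarchy.

    `trail` holds (label, href) pairs in display order. Assumes URLs
    mirror the site hierarchy and use trailing slashes.
    """
    expected = ancestor_paths(urlparse(current_url).path)
    actual = [urlparse(href).path for _, href in trail]
    problems = []
    if len(actual) != len(expected):
        problems.append(f"trail has {len(actual)} levels, URL implies {len(expected)}")
    # Compare level by level, up to the shorter of the two lists.
    for level, (want, got) in enumerate(zip(expected, actual)):
        if want != got:
            problems.append(f"level {level}: expected {want}, found {got}")
    return problems


# Hypothetical trail that skips the "Help Centre" level.
trail = [("Home", "/"), ("Returns", "/help/returns/")]
print(breadcrumb_matches_path(trail, "https://shop.example/help/returns/"))
```

Here the trail Home > Returns on `/help/returns/` produces two findings: a level-count mismatch and a wrong link at level 1. Whether the labels themselves make sense to users remains a question for the manual phase.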

Finally, manual testing validates that all these indicators genuinely communicate a user’s position within the site. Human testers use keyboards, screen readers, and visual inspection to confirm that navigation cues are not only present but clear, perceivable, and meaningful. They assess whether page titles reflect hierarchy, breadcrumbs use logical naming, and highlighted menu items accurately correspond to the current location. The manual phase also evaluates the overall user journey: how easily a user can backtrack, predict their next step, or reorient after navigating deep into the site.

Together, these three methods form a continuous cycle: automation ensures consistency at scale, AI adds contextual depth, and human evaluation confirms authenticity. This fusion of machine precision and human empathy ensures that users not only know where they are but also feel confident about where they’re going.

In the end, testing WCAG 2.4.8 isn’t just about meeting a guideline. It’s about designing experiences that are intuitive, navigable, and empowering. That’s what true accessibility, and great user experience, looks like.

Related Resources

- Understanding Success Criterion 2.4.8 Location

- Mind the WCAG automation gap

- Providing a breadcrumb trail

- Providing a site map

- Indicating current location within navigation bars

- Identifying a web page’s relationship to a larger collection of web pages

- Providing running headers and footers in PDF documents

- Specifying consistent page numbering for PDF documents