Note: This article on testing On Input was written by a human, with the assistance of artificial intelligence.

Explanation of the success criterion

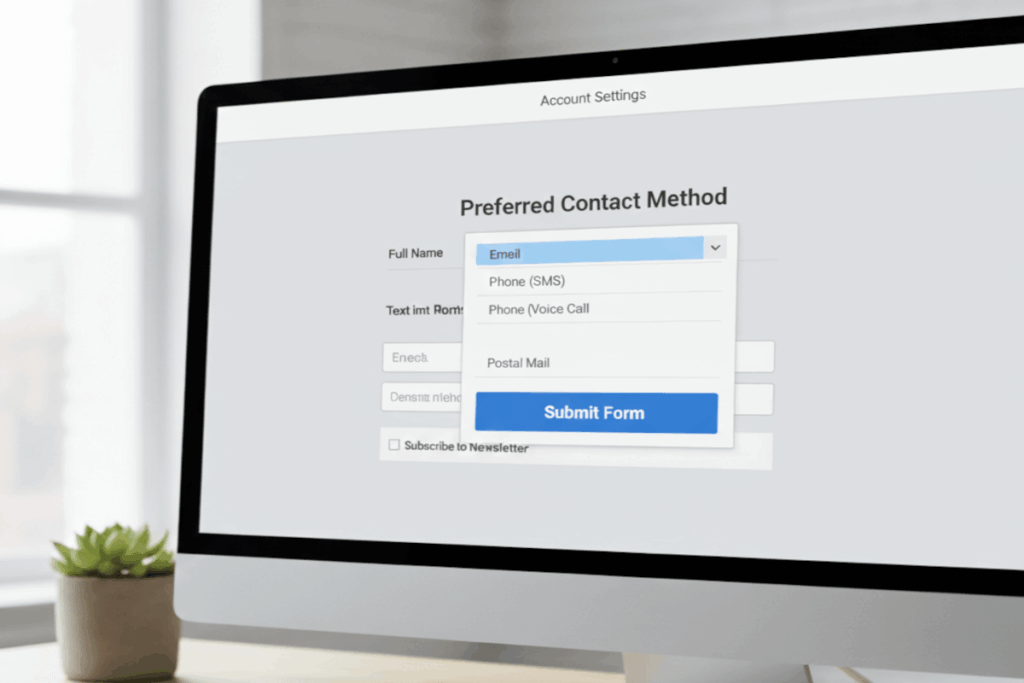

WCAG 3.2.2 On Input is a Level A Success Criterion. It is designed to preserve user control and prevent confusion caused by unexpected changes of context when users interact with input elements such as form fields, dropdowns, and checkboxes. The core objective is to ensure that users are never surprised or disoriented by automatic actions triggered by their inputs, such as instant form submissions, redirects, or focus shifts.

For example, a selection change in a form should not immediately submit the data or alter the page without explicit user confirmation. Instead, meaningful context changes, like navigation, content updates, or dynamic interface adjustments, should occur only when users explicitly request them, for example by activating a submit button, or after users have been advised of the behavior before they use the control. By upholding this principle, designers and developers create experiences that are predictable, trustworthy, and fully accessible, particularly for users who depend on consistency to navigate effectively.

Who does this benefit?

- People with cognitive or learning disabilities who may become confused or disoriented when the page changes unexpectedly after entering or selecting information.

- People with motor disabilities who may accidentally trigger input changes and struggle to recover if those changes cause unexpected navigation or context shifts.

- People with visual impairments or blindness who rely on screen readers and need predictable interactions to understand what’s happening on the page.

- People using speech recognition software who might unintentionally activate a control through a spoken command and need the opportunity to confirm actions.

- All users who benefit from predictable, consistent, and controllable user interfaces that don’t cause sudden loss of work or navigation context.

Testing via Automated testing

Automated testing provides scalability and efficiency, offering a first line of detection for potential violations of WCAG 3.2.2. Automated tools can rapidly scan web applications to locate technical triggers, such as onchange, onclick, or onsubmit handlers, that could cause a change of context. This broad coverage is valuable for identifying high-risk patterns early in the testing process. However, automation’s strength is also its limitation: while it detects the presence of event triggers, it cannot discern intent or user experience. Automated tools do not understand whether a triggered change is disruptive or expected, resulting in false positives and missed issues in complex, dynamic environments.
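As a rough illustration of this kind of scan, the sketch below uses Python's standard-library HTML parser to inventory inline event handlers on form-related elements. The attribute list, the tag list, and the names `HandlerInventory` and `find_input_handlers` are assumptions made for this example and do not reflect how any particular testing tool works. It only sees handlers written as inline attributes, so listeners attached from scripts would go undetected.

```python
from html.parser import HTMLParser

# Inline attributes that commonly run code when a control is used.
# This list is an assumption for illustration, not an exhaustive rule set.
INPUT_EVENT_ATTRS = {"onchange", "oninput", "onclick", "onsubmit"}
FORM_TAGS = {"form", "input", "select", "textarea"}


class HandlerInventory(HTMLParser):
    """Collects inline event handlers found on form-related elements."""

    def __init__(self):
        super().__init__()
        self.findings = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names before calling this.
        if tag not in FORM_TAGS:
            return
        for name, value in attrs:
            if name in INPUT_EVENT_ATTRS:
                line, _column = self.getpos()
                self.findings.append(
                    {"line": line, "tag": tag, "event": name, "code": value or ""}
                )


def find_input_handlers(html):
    """Return one finding per inline handler on a form-related element."""
    parser = HandlerInventory()
    parser.feed(html)
    parser.close()
    return parser.findings


SAMPLE = """<form action="/search" onsubmit="return validate()">
  <select name="lang" onchange="this.form.submit()">
    <option>English</option>
  </select>
  <input type="text" name="q">
</form>"""

for finding in find_input_handlers(SAMPLE):
    print(finding)
```

Each finding is only a candidate for review: the scan records that a handler exists and where, not whether the resulting behavior surprises the user.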

Testing via Artificial Intelligence (AI)

AI-based testing introduces a layer of intelligence that bridges the gap between raw code analysis and user experience prediction. Using behavioral modeling, heuristic analysis, and data-driven learning, AI can simulate user interactions to determine whether specific input behaviors may cause confusion or disorientation. AI systems can recognize patterns where context changes may be acceptable, such as form validation feedback, or where they likely violate user expectations. By prioritizing the most critical risks and even recommending design or behavioral fixes, AI enhances both efficiency and accuracy. Yet, the technology is not infallible. AI’s insight depends on the diversity of its training data and its ability to interpret real-world intent. It can misjudge unconventional interaction designs or novel interface components that deviate from typical patterns.

Testing via Manual testing

Manual testing remains the cornerstone of accessibility validation and the ultimate arbiter of conformance. Human testers can evaluate intent, predictability, and communication in ways that no algorithm can fully replicate. By directly interacting with forms, menus, and input-driven components, using tools like screen readers or keyboard navigation, testers assess whether users receive sufficient cues before any change of context occurs. Manual testing verifies focus behavior, warning messages, and the clarity of transitions, ensuring that content behaves consistently and intuitively. Although manual testing demands expertise and time, its qualitative insights are unmatched. It captures the lived experiences of users, ensuring accessibility in both technical and human terms.

Which approach is best?

An effective hybrid approach to testing WCAG 3.2.2 On Input integrates automated, AI-based, and manual testing to achieve both breadth and depth in evaluating how user inputs affect context changes.

The process begins with automated testing, which scans the entire site or application for potential triggers that may cause unexpected behavior. These include event handlers such as onchange, onclick, or onsubmit attached to input fields, dropdown menus, or checkboxes. Automated tools excel at identifying these technical indicators across large codebases, providing a broad inventory of potential problem areas. However, since automation alone cannot interpret user intent or the impact of the interaction, its results serve primarily as a foundation for deeper analysis.

Next, AI-based testing refines these findings by introducing context awareness. AI systems can simulate user interactions to predict whether an action, like selecting a dropdown option, causes a disorienting or unanticipated page change. Through behavioral modeling and data-driven heuristics, AI evaluates whether context changes align with user expectations and design conventions. It can prioritize the most likely violations, differentiate between acceptable and problematic changes, and even recommend adjustments such as adding confirmation dialogs or visual cues. This stage bridges the gap between technical detection and experiential assessment, helping testers focus their manual reviews where they matter most.
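The prioritization idea can be sketched with a much simpler, rule-based stand-in. The example below is not an AI model: it only matches handler code against a few hypothetical patterns that often signal a change of context, then ranks handlers by how many signals they contain. The pattern set and the names `triage` and `prioritize` are assumptions made for illustration, and a real rule set would need tuning for each codebase.

```python
import re

# Hypothetical signals of a change of context in handler code.
# Assumed for illustration; matching a pattern does not prove a violation.
CONTEXT_CHANGE_PATTERNS = {
    "form submission": re.compile(r"\.submit\s*\("),
    "navigation": re.compile(
        r"\blocation(\.href)?\s*=(?!=)|\blocation\.(assign|replace)\s*\("
    ),
    "new window": re.compile(r"\bwindow\.open\s*\("),
    "focus move": re.compile(r"\.focus\s*\("),
}


def triage(handler_code):
    """Return the label of every pattern found in one handler string."""
    return [
        label
        for label, pattern in CONTEXT_CHANGE_PATTERNS.items()
        if pattern.search(handler_code)
    ]


def prioritize(handlers):
    """Order handlers so those with the most signals are reviewed first."""
    scored = [(code, triage(code)) for code in handlers]
    # sorted() is stable, so handlers with equal scores keep their input order.
    return sorted(scored, key=lambda item: len(item[1]), reverse=True)


HANDLERS = [
    "updateCharCount(this)",
    "this.form.submit()",
    "window.location.href = this.value",
]

for code, signals in prioritize(HANDLERS):
    print(signals or ["no signal"], "<-", code)
```

A handler with no matching signal is not necessarily safe, and a flagged one is not necessarily a failure, for instance when the user was told about the behavior in advance. The ranking only suggests where manual review should start.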

Finally, manual testing delivers the definitive evaluation of compliance and usability. Experienced testers validate AI findings by interacting directly with the interface using a range of assistive technologies, including screen readers and keyboard navigation. They assess whether changes in context are clearly communicated, whether focus remains predictable, and whether any input-driven behavior could confuse or disrupt users. Manual testing also ensures that remediation aligns with user expectations and accessibility best practices, providing a human-centered layer of assurance.

By combining the efficiency of automation, the contextual intelligence of AI, and the judgment of human expertise, this hybrid methodology delivers a comprehensive assessment of WCAG 3.2.2 compliance. It ensures not only that technical issues are detected and prioritized efficiently but also that the overall user experience remains consistent, predictable, and accessible for all users.

Related Resources

- Understanding Success Criterion 3.2.2 On Input

- Mind the WCAG automation gap

- Providing a submit button to initiate a change of context

- Providing submit buttons

- Using a button with a select element to perform an action

- Providing submit buttons with the submit-form action in PDF forms

- Describing what will happen before a change to a form control that causes a change of context to occur is made

- Using an onchange event on a select element without causing a change of context