Note: The creation of this article on testing Page Titled was human-based, with the assistance of artificial intelligence.

Explanation of the success criteria

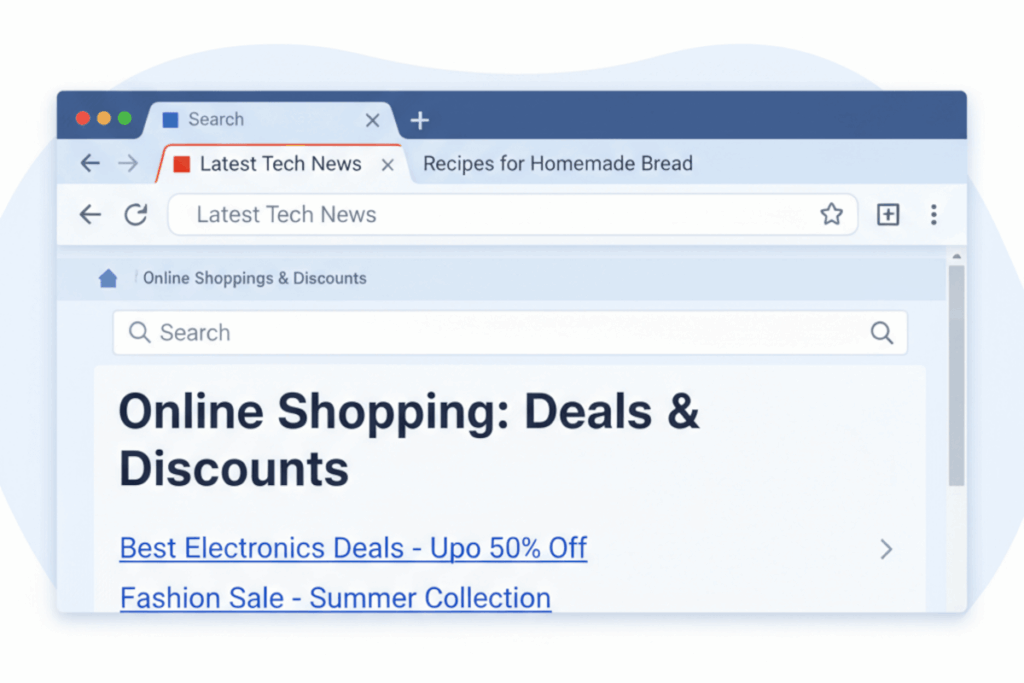

WCAG 2.4.2 Page Titled is a Level A Success Criterion. It may seem simple on the surface, but it’s one of the most foundational aspects of accessible design. Every page needs a clear, descriptive title that accurately reflects its purpose and content. For users of assistive technologies, especially screen readers, page titles are often the very first cue about where they are. For everyone else, they’re a navigational anchor that keeps experiences coherent across tabs, windows, and devices. A strong title sets the stage for clarity, usability, and trust. It’s not just about compliance; it’s about giving users confidence that they’re in the right place.

Who does this benefit?

- Screen reader users rely on page titles to understand a page’s purpose immediately after it loads.

- Users with cognitive or memory impairments benefit from clear titles that help maintain orientation and reduce confusion.

- Keyboard-only users can navigate more efficiently when each page is clearly identified.

- Users with limited mobility save time and effort by quickly recognizing the purpose of each page.

- Mobile users and multitaskers can easily identify and return to the correct tab or window.

- All users gain from improved clarity, navigation, and organization across multiple pages.

Testing via Automated Testing

Automated testing is the logical first step: it’s fast, scalable, and precise when it comes to verifying that a <title> element exists and is programmatically detectable. It can comb through vast websites to catch missing or empty titles, providing a solid foundation for compliance. Yet automation alone can’t determine whether a title is good, that is, whether it’s meaningful, distinctive, or contextually accurate. It also struggles with dynamic content and complex single-page applications, where page titles change with user interactions. In other words, it identifies what’s there, but not whether it works.
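As a rough illustration of the structural check described above, here is a minimal Python sketch that classifies a page as having a missing, empty, or present title. It uses only the standard library's html.parser module. The names TitleExtractor and check_title are hypothetical and chosen for this example, and the sketch only sees the HTML as served, so it would not catch titles set later by JavaScript in a single-page application.

```python
from html.parser import HTMLParser


class TitleExtractor(HTMLParser):
    """Collects the text of the first <title> element encountered.

    Limitation (assumption of this sketch): it does not check that the
    element sits inside <head>, so an SVG <title> could be picked up on a
    page that has no document title.
    """

    def __init__(self):
        super().__init__()
        self.found = False       # True once a <title> start tag has been seen
        self._in_title = False   # True while inside the first <title>
        self._parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "title" and not self.found:
            self.found = True
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self._parts.append(data)

    @property
    def title(self):
        # Collapse runs of whitespace and trim the ends.
        return " ".join("".join(self._parts).split())


def check_title(html):
    """Return 'missing', 'empty', or 'ok' for one HTML document."""
    parser = TitleExtractor()
    parser.feed(html)
    parser.close()
    if not parser.found:
        return "missing"
    if not parser.title:
        return "empty"
    return "ok"
```

A check like this only confirms that a title exists and contains text. Whether that text is meaningful is left to the later stages.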

Testing via Artificial Intelligence (AI)

AI-based testing adds a new layer of intelligence by examining title quality in relation to a page’s content. It can recognize patterns, flag vague or repetitive titles, and detect inconsistencies across large content ecosystems. For example, it might spot that too many pages are titled simply “Home” or “Products,” prompting designers to refine them for clarity. However, AI still lacks the depth of human understanding and may misread context, intent, or brand tone. While it excels at identifying potential issues at scale, it still needs human judgment to confirm and interpret its findings.

Testing via Manual Testing

Manual testing remains the gold standard for assessing the user experience of page titles. A skilled reviewer can determine if titles truly reflect each page’s purpose, differentiate similar content, and help users stay oriented, especially in complex flows like e-commerce checkouts or administrative dashboards. Human testers bring empathy and contextual awareness that machines can’t replicate. The trade-off, of course, is time and scalability. Manual testing can be resource-intensive, particularly for large or frequently updated sites, but it provides the most authentic measure of usability and accessibility.

Which approach is best?

No single approach to testing Page Titled is perfect. The most effective strategy marries the strengths of automation, AI, and human insight.

The process begins with automated testing, which scans every page across the site to confirm that a <title> element exists and is programmatically exposed. This step ensures comprehensive coverage and quickly identifies missing, empty, or duplicate titles, issues that are easy to fix and essential for basic accessibility.

Once those structural checks are complete, AI-based testing refines the analysis by examining the quality of the titles in context. AI tools can compare each page title against visible content, metadata, and headings to identify vague or non-descriptive titles, flag inconsistencies, and detect patterns such as repetitive or overly generic labeling. This intelligent filtering helps prioritize where human review is most valuable.

Finally, manual testing validates the results through a usability lens, ensuring titles accurately reflect purpose, context, and intent. Testers review whether titles meaningfully distinguish similar pages, align with user expectations, and improve orientation for people navigating via assistive technologies.
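To make the hand-off between stages more concrete, the following Python sketch shows one possible way to triage titles before human review. It is not an AI model, just a simple heuristic pre-filter. It assumes the titles have already been collected into a mapping of URL to title text. The function triage_titles and the GENERIC_TITLES set are hypothetical, with the set seeded from the “Home” and “Products” examples mentioned earlier.

```python
from collections import defaultdict

# Illustrative only: labels treated as too generic when used alone.
GENERIC_TITLES = {"home", "products"}


def normalize(title):
    """Collapse whitespace and ignore case so near-identical titles match."""
    return " ".join(title.split()).casefold()


def triage_titles(titles_by_url):
    """Return (duplicates, generic) for a mapping of URL -> title text.

    duplicates: normalized title -> sorted URLs sharing that title
    generic:    sorted URLs whose whole title is a generic label
    """
    urls_by_title = defaultdict(list)
    for url, title in titles_by_url.items():
        urls_by_title[normalize(title)].append(url)

    duplicates = {
        title: sorted(urls)
        for title, urls in urls_by_title.items()
        if title and len(urls) > 1
    }
    generic = sorted(
        url
        for url, title in titles_by_url.items()
        if normalize(title) in GENERIC_TITLES
    )
    return duplicates, generic


if __name__ == "__main__":
    sample = {
        "/": "Home",
        "/shop": "Products",
        "/shop/shoes": "Products",
        "/cart": "Your cart - Example Shop",
    }
    dupes, generic = triage_titles(sample)
    print("Duplicate titles:", dupes)
    print("Generic titles:", generic)
```

Pages flagged this way are candidates for review, not confirmed failures. A reviewer still decides whether a shared or short title is actually a problem in context.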

By integrating these methods, organizations can achieve both efficiency and depth. Automation brings speed, AI adds intelligence, and human evaluation delivers empathy and accuracy. Together, they ensure page titles aren’t just compliant with WCAG but also meaningful, contextual, and truly user-centered, reinforcing a digital experience that’s both accessible and intuitive.